In traditional SEO, we optimize for keywords.

In AI SEO, we optimize for concepts and intent layers.

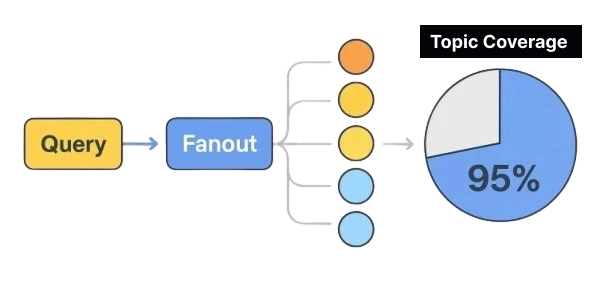

That’s why at LLMClicks, we use multi-layer fanout query generation — expanding 10–20 GSC keywords into 150–240 semantically rich, AI-ready prompts — covering 95% of the topic ecosystem.

But generating queries is only half the story.

The real win comes when you turn those queries into structured, AI-optimized content.

Here’s exactly how 👇

Start with your real search data from Google Search Console.

Filter top queries by clicks, impressions, and average position (≤ 50).

Cluster semantically and classify by intent (Informational, How-to, Transactional, Comparison, Local).
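If you want to prototype this step yourself, here is a minimal Python sketch of the filter-and-classify pass. It assumes you have already exported GSC rows (query, clicks, impressions, average position). The `GscRow` type, the click and impression thresholds, and the keyword cues in `INTENT_CUES` are hypothetical; only the position ≤ 50 cutoff comes from the step above. Semantic clustering is left out, and a production pipeline would more likely use embeddings or an LLM for both clustering and intent labels.

```python
from dataclasses import dataclass

@dataclass
class GscRow:
    query: str
    clicks: int
    impressions: int
    position: float  # average position as reported by Search Console

# Hypothetical keyword cues per intent, checked in this order.
# A real pipeline would use embeddings or an LLM classifier instead.
INTENT_CUES = {
    "How-to": ("how to", "steps", "guide"),
    "Comparison": (" vs ", "versus", "compare", "best"),
    "Transactional": ("buy", "price", "pricing", "cost", "hire"),
    "Local": ("near me", "in my area"),
}

def filter_top_queries(rows, min_clicks=1, min_impressions=10, max_position=50.0):
    """Keep queries that earn traffic and rank at average position <= 50."""
    return [
        r for r in rows
        if r.clicks >= min_clicks
        and r.impressions >= min_impressions
        and r.position <= max_position
    ]

def classify_intent(query: str) -> str:
    """Return the first intent whose cue appears in the query."""
    padded = f" {query.lower()} "  # padding lets " vs " match at the start or end
    for intent, cues in INTENT_CUES.items():
        if any(cue in padded for cue in cues):
            return intent
    return "Informational"  # default when no cue matches

def group_by_intent(rows):
    """Bucket the surviving queries by intent label."""
    groups = {}
    for r in rows:
        groups.setdefault(classify_intent(r.query), []).append(r.query)
    return groups
```

Running `group_by_intent(filter_top_queries(rows))` on your export gives one query list per intent, ready for the fanout step.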

Use LLMClicks’ Multi-Layer Fanout Engine to expand each cluster into:

- Core queries (main topic)
- Adjacent topics
- Problem–solution queries
- Related ecosystem concepts

🎯 Output: 150–240 high-quality LLM-style queries per topic

→ This is your topic universe — what AI and humans are really asking about your niche.
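The four fanout layers can be pictured as a simple data structure. The sketch below is not LLMClicks’ Fanout Engine: it uses hypothetical string templates (`LAYER_TEMPLATES`) as a stand-in for LLM-generated variations, just to show how one topic branches into core, adjacent, problem–solution, and ecosystem queries.

```python
# Hypothetical templates standing in for LLM-generated variations.
# {t} = the cluster topic, {a} = an adjacent topic.
LAYER_TEMPLATES = {
    "core": ["what is {t}", "how does {t} work", "{t} explained"],
    "adjacent": ["{t} vs {a}", "how {t} relates to {a}"],
    "problem_solution": ["common {t} problems", "how to fix {t} issues"],
    "ecosystem": ["tools for {t}", "{t} best practices"],
}

def fanout(topic: str, adjacent_topics: list[str]) -> dict[str, list[str]]:
    """Expand one topic into layered queries, one list per fanout layer."""
    layers: dict[str, list[str]] = {}
    for layer, templates in LAYER_TEMPLATES.items():
        queries: list[str] = []
        for tpl in templates:
            if "{a}" in tpl:
                # One query per adjacent topic
                queries.extend(tpl.format(t=topic, a=a) for a in adjacent_topics)
            else:
                queries.append(tpl.format(t=topic))
        layers[layer] = queries
    return layers
```

Fixed templates only produce a handful of queries per topic; reaching the 150–240 range described above is where an LLM replaces the templates.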


Next, LLMClicks automatically groups queries into content templates based on semantic proximity and intent type.

For example:

| Query Layer | Content Format Blueprint | Goal |
|---|---|---|
| Informational | Blog post / Knowledge Hub | Awareness & topical authority |
| How-to | Step-by-step Guide / Checklist | Search intent depth |
| Transactional | Comparison / Product Page | Conversion-focused |
| Problem–Solution | Troubleshooting / Use Case | High user engagement |
| Adjacent Topics | Cluster article / Internal link target | Semantic reinforcement |

🎯 Output: Structured content map with recommended content types for each intent layer.
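To make the blueprint table actionable, it can be stored as plain data and joined against your clusters. A minimal sketch, assuming each cluster has already been labeled with one of the five layers in the table; `BLUEPRINTS` and `build_content_map` are illustrative names, and falling back to the Informational blueprint for unknown layers is an assumption.

```python
# The blueprint table above, as data: layer -> (content format, goal).
BLUEPRINTS = {
    "Informational": ("Blog post / Knowledge Hub", "Awareness & topical authority"),
    "How-to": ("Step-by-step Guide / Checklist", "Search intent depth"),
    "Transactional": ("Comparison / Product Page", "Conversion-focused"),
    "Problem–Solution": ("Troubleshooting / Use Case", "High user engagement"),
    "Adjacent Topics": ("Cluster article / Internal link target", "Semantic reinforcement"),
}

def build_content_map(clusters: dict[str, tuple[str, list[str]]]) -> list[dict]:
    """Turn {cluster name: (layer, queries)} into content-map rows."""
    rows = []
    for name, (layer, queries) in clusters.items():
        # Assumption: unknown layers fall back to the Informational blueprint.
        fmt, goal = BLUEPRINTS.get(layer, BLUEPRINTS["Informational"])
        rows.append({
            "cluster": name,
            "layer": layer,
            "format": fmt,
            "goal": goal,
            "queries": queries,
        })
    return rows
```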

Now comes the AI part.

Each query cluster becomes a content prompt input for your LLM or internal content agent.

Example prompt in LLMClicks Agent:

> Write a detailed article addressing the following related user queries:
>
> - How to fix duplicate business citations
> - Why are my business citations inconsistent
> - How to clean up local listings
>
> Focus on clarity, include stats, and cite authoritative sources.

💡 The agent uses your fanout query clusters as topic scaffolds, ensuring that every angle of the topic is covered.

🎯 Result: Each article covers multiple semantic layers → feeding back into the 95% topic coverage metric.
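If you are wiring this into your own LLM rather than the LLMClicks Agent, a prompt like the one above is easy to generate from a cluster. The helper below is a hypothetical sketch that only builds the prompt string; sending it to a model is left to whichever client library you use.

```python
def build_article_prompt(
    queries: list[str],
    instructions: str = "Focus on clarity, include stats, and cite authoritative sources.",
) -> str:
    """Build a multi-query article prompt from one fanout cluster."""
    # Drop blanks and duplicates while keeping the cluster's original order
    unique = list(dict.fromkeys(q.strip() for q in queries if q.strip()))
    lines = ["Write a detailed article addressing the following related user queries:", ""]
    lines += [f"- {q}" for q in unique]
    lines += ["", instructions]
    return "\n".join(lines)
```

Passing every query in a cluster, rather than one keyword, is what makes the resulting article span several semantic layers.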

Every piece of content generated is then:

- Mapped to its fanout query cluster (Query → Page mapping)
- Checked with our On-Page AI Audit (meta, headings, schema, entity density, etc.)
- Scored for Semantic Coverage %: how well it covers its cluster queries

If a page covers <70% of its cluster queries, the system recommends:

- Adding missing sections
- Integrating FAQs
- Expanding internal links to related concepts

🎯 Goal: Every page becomes an LLM-friendly, multi-intent hub — not just an article.
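The coverage check can be approximated in a few lines. This is a rough sketch that uses word overlap as a stand-in for semantic similarity; LLMClicks’ actual scoring method isn’t described here, and embeddings would be the more realistic choice. A query counts as covered when at least 75% of its content words appear on the page (a hypothetical cutoff), while the 70% page-level threshold comes from the rule above. The stopword list and function names are illustrative.

```python
import re

# Small illustrative stopword list so function words don't inflate overlap
STOPWORDS = {"a", "an", "and", "are", "do", "for", "how", "i", "in", "is",
             "my", "of", "on", "the", "to", "what", "why"}

def content_words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def query_covered(query: str, page_words: set[str], min_overlap: float = 0.75) -> bool:
    """Hypothetical rule: covered if >= 75% of the query's content words are on the page."""
    words = content_words(query)
    if not words:
        return False
    return len(words & page_words) / len(words) >= min_overlap

def semantic_coverage(page_text: str, cluster_queries: list[str], threshold: float = 0.70):
    """Return (coverage ratio, recommendations) for one page and its cluster."""
    page_words = content_words(page_text)
    missing = [q for q in cluster_queries if not query_covered(q, page_words)]
    coverage = 1 - len(missing) / len(cluster_queries) if cluster_queries else 0.0
    recommendations = []
    if coverage < threshold:
        recommendations = [
            "Add sections for: " + "; ".join(missing),
            "Integrate an FAQ block",
            "Expand internal links to related concepts",
        ]
    return coverage, recommendations
```

A page that answers two of three cluster queries scores about 67%, so it falls under the 70% bar and the missing query is named in the first recommendation.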

Once pages go live:

- LLMClicks tracks citations and mentions across ChatGPT, Perplexity, Claude, etc.
- Shows AI visibility gains per query cluster
- Identifies new “cold” queries (topics where you’re not cited yet)

→ Feed those cold queries back into the Fanout Engine

→ Generate new supporting content

This creates a closed-loop, self-optimizing system — from queries → content → AI visibility → new queries.
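The feedback loop boils down to two questions: which clusters are earning citations, and which queries are still cold. A minimal sketch, assuming you already log one observation per (query, AI engine) check as a `(query, engine, cited)` tuple; the tuple shape, the function names, and the `"unmapped"` bucket are hypothetical.

```python
from collections import defaultdict

# One observation = (query, AI engine checked, whether your brand was cited).
Observation = tuple[str, str, bool]

def find_cold_queries(observations: list[Observation]) -> list[str]:
    """Queries where no tracked engine has cited you yet."""
    cited_anywhere: dict[str, bool] = {}
    for query, _engine, cited in observations:
        cited_anywhere[query] = cited_anywhere.get(query, False) or cited
    return sorted(q for q, hit in cited_anywhere.items() if not hit)

def visibility_by_cluster(
    observations: list[Observation], query_to_cluster: dict[str, str]
) -> dict[str, float]:
    """Share of (query, engine) checks that produced a citation, per cluster."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for query, _engine, cited in observations:
        cluster = query_to_cluster.get(query, "unmapped")
        totals[cluster] += 1
        hits[cluster] += int(cited)
    return {c: hits[c] / totals[c] for c in totals}
```

Comparing two `visibility_by_cluster` snapshots over time gives the per-cluster gain, and the queries returned by `find_cold_queries` become the seeds for the next fanout round.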

| Stage | What Happens | Outcome |
|---|---|---|
| 1️⃣ Generate Queries | Multi-layer fanout (core + adjacent + problem + related) | 150–240 high-quality prompts |
| 2️⃣ Cluster Intelligently | Intent + semantic grouping | Clear content structure |
| 3️⃣ Generate Content | Feed clusters to AI/agents | AI-optimized pages |
| 4️⃣ Audit & Map | On-page + Query-to-Page | Quality & coverage control |
| 5️⃣ Measure & Evolve | Track citations & re-optimize | 95%+ topic coverage over time |

Fanout Queries aren’t just for keyword expansion — they’re for topic domination.

By pairing multi-layer fanout generation with content clustering and AI optimization, you can build an interconnected content ecosystem that LLMs recognize as authoritative.

That’s how LLMClicks helps brands move from keyword visibility → AI visibility.

© 2026 LLMClicks.ai. All Rights Reserved.