For more than two decades, SEO has revolved around one central truth:

Older domains with strong backlink profiles perform better.

That belief has shaped how websites are built, how content is produced, and how authority is earned.

But with the rise of LLM-driven search engines—ChatGPT, Perplexity, Claude, and Google’s AI Overviews—an entirely different question emerges:

Do LLMs evaluate websites the same way Google does?

Or have the rules of ranking changed completely?

To answer this, we ran a controlled experiment:

We launched a brand-new domain on the following timeline:

Domain registered: citationmatrix.com

Domain registration date – 23 Nov 2025

WordPress installed – 25 Nov 2025

Topical map created using ChatGPT – 25 Nov 2025

Content created using the ChatGPT batch feature – 27 Nov 2025

Imported content to WordPress – 27, 28 Nov 2025

Site submitted to GSC – 1 Dec 2025

We weren’t looking for “SEO results.”

We wanted to measure LLM results—how the AI search ecosystem interprets, recalls, ranks, and cites newly created content.

The results dismantled several long-held assumptions—and confirmed something groundbreaking:

To ensure the results were pure and uncontaminated by traditional SEO advantages, we followed a strict protocol.

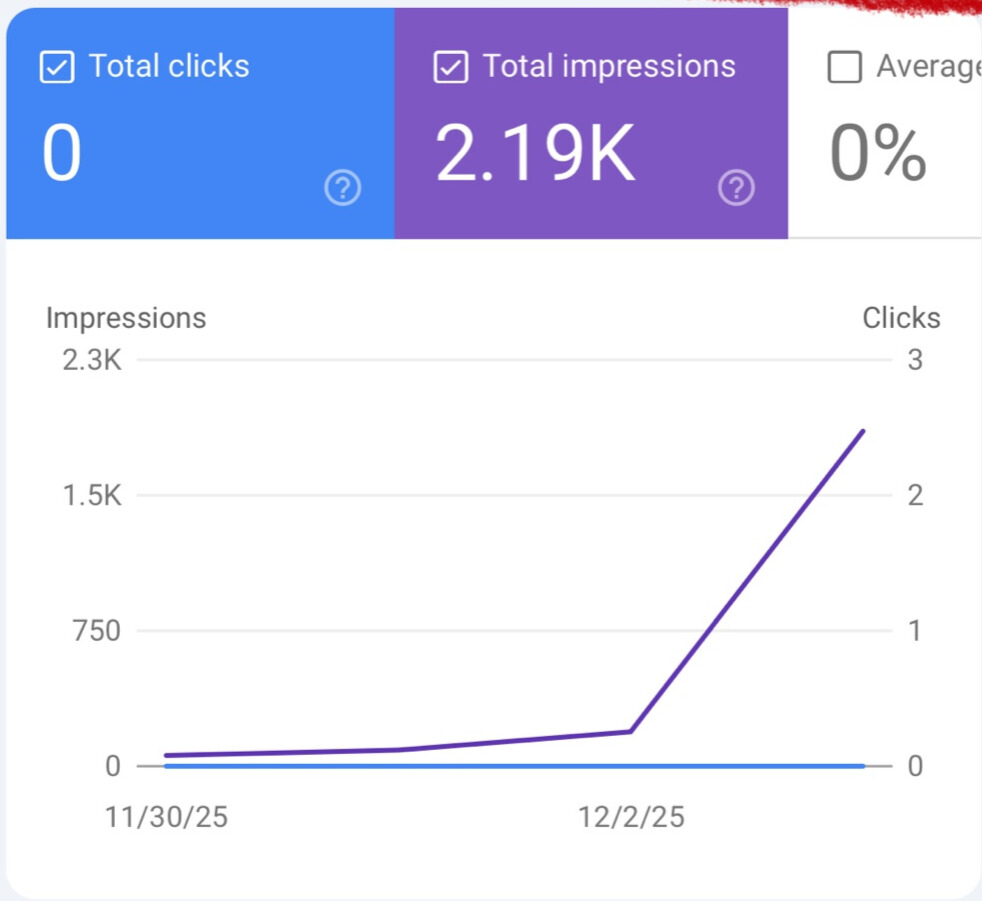

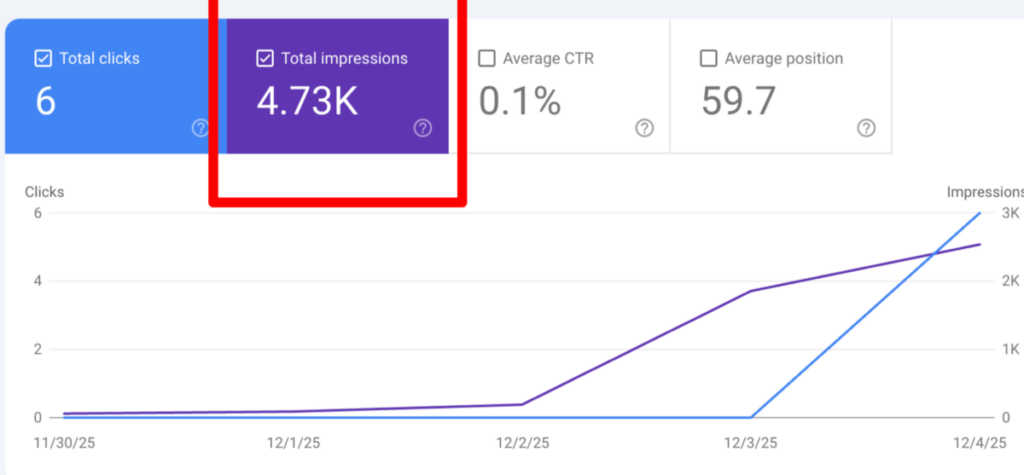

Yet, within 41 hours of going live…

This early spike alone suggests Google’s algorithms are shifting toward semantic interpretation, not historical authority.

We built 72 long-form articles using a high-precision, LLM-optimized content generation system:

✔ Automatic internal linking

✔ CTA block injection

✔ Gutenberg-ready HTML

✔ Block-based layout

✔ Section anchors

✔ Entity enrichment

✔ Semantic reinforcement patterns

✔ Hierarchical header structure

✔ Silo-based link graph mapping

✔ FAQs (when applicable)

✔ Micro-snippets for LLM snippet extraction

This structure was intentionally created to test a hypothesis:

If LLMs reward semantic clarity, structure, and topical completeness, then backlinks and domain age may matter far less.

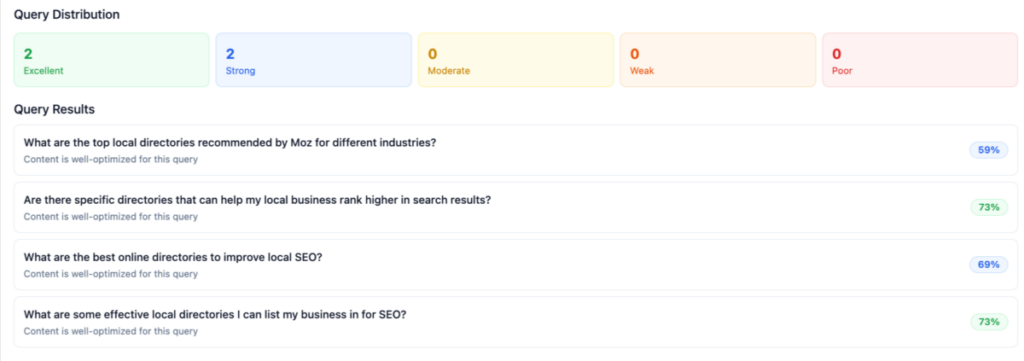

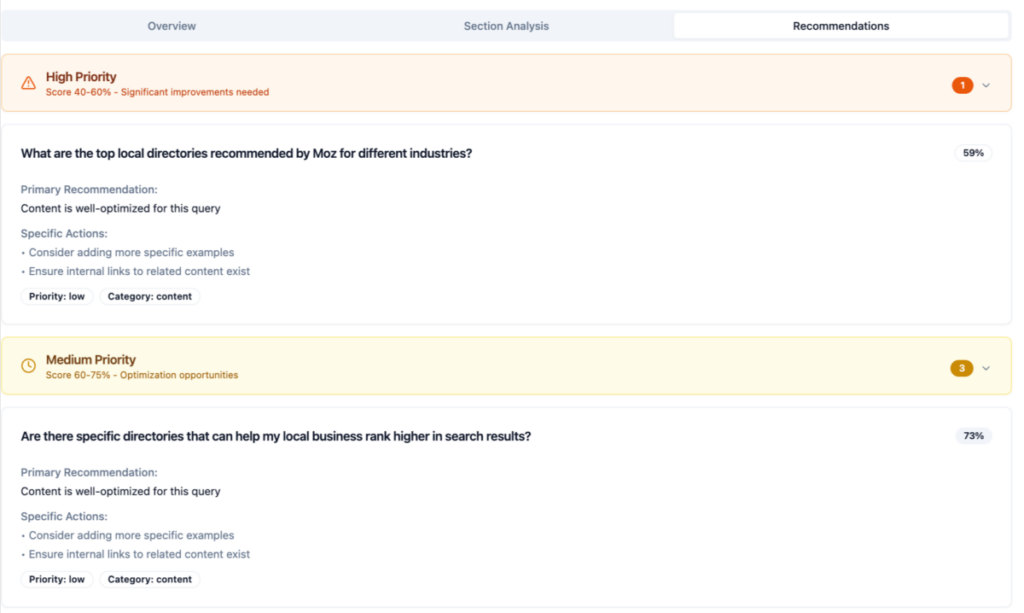

To accurately measure LLM ranking behavior, we needed a query dataset that reflected real user intent, not synthetic prompts.

Because the domain was new, we relied on two complementary systems inside LLMClicks:

Together, these produced a realistic, multi-intent evaluation dataset.

Even though the domain was new and GSC data was limited, LLMClicks was able to extract meaningful early insights.

LLMClicks analyzed early GSC impressions and allowed us to group the queries into five intent categories:

Informational

Transactional

How-To

Comparison

Navigational

This ensured that our foundation was not artificial: our queries reflected how real users were already discovering or interpreting the content.
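To make the intent grouping concrete, here is a minimal sketch of sorting queries into the five categories listed above. It is an illustration only: the keyword cues, the `classify_intent` helper, and the sample queries are all assumptions made for this example, not the rules LLMClicks actually applies.

```python
# Hypothetical keyword cues per intent; the first matching rule wins.
# Anything that matches no rule falls back to "Informational".
INTENT_RULES = [
    ("How-To", ("how to", "how do", "steps to")),
    ("Comparison", (" vs ", "versus", "compare", "alternative")),
    ("Transactional", ("buy", "price", "pricing", "cost", "hire")),
    ("Navigational", ("login", "website", "homepage")),
]


def classify_intent(query):
    """Assign one of the five intent categories to a search query."""
    # Pad with spaces so cues like " vs " also match at the string edges.
    padded = f" {query.lower()} "
    for intent, cues in INTENT_RULES:
        if any(cue in padded for cue in cues):
            return intent
    return "Informational"


# Made-up sample queries, for illustration only.
sample_queries = [
    "how to build local citations",
    "moz vs brightlocal",
    "citation service pricing",
    "brightlocal login",
    "what is a local citation",
]
labels = {q: classify_intent(q) for q in sample_queries}
```

A rule list like this is easy to audit, but it is brittle. A production system would more plausibly use a trained classifier or an LLM prompt for the same step.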

Because GSC data was limited, we supplemented it with a second, more comprehensive method:

LLMClicks’ Content Analysis engine examined every article and derived seed queries from:

Entity combinations

Intent categories

Topic relationships

Subtopic relevance

This ensured every page had a semantically aligned set of seed queries—even if GSC data was insufficient.

LLMClicks evaluates content using high-dimensional embeddings.

For each query:

Low-similarity pages were sent to LLMClicks’ Content Analysis engine to detect:

This embedding-driven scoring allowed us to measure semantic authority, independent of backlinks or domain age.
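The exact scoring steps are not reproduced here, so the following is only a sketch of the general technique: cosine similarity between a query embedding and each page embedding, with pages under a cutoff flagged for further analysis. The three-dimensional vectors, the page paths, the `score_pages` helper, and the `threshold` value of 0.5 are all assumptions for illustration. Real embeddings have hundreds or thousands of dimensions and come from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat an all-zero vector as having no similarity.
    return dot / (norm_a * norm_b)


def score_pages(query_vec, page_vecs, threshold=0.5):
    """Score every page against one query and list the low-similarity pages.

    `threshold` is a hypothetical cutoff chosen for this example.
    """
    scores = {
        url: cosine_similarity(query_vec, vec)
        for url, vec in page_vecs.items()
    }
    low_similarity = sorted(url for url, s in scores.items() if s < threshold)
    return scores, low_similarity


# Toy vectors standing in for real embeddings.
query = [1.0, 0.0, 1.0]
pages = {
    "/page-a": [1.0, 0.0, 1.0],  # Same direction as the query.
    "/page-b": [0.0, 1.0, 0.0],  # Orthogonal to the query.
}
scores, low_similarity = score_pages(query, pages)
```

Cosine similarity depends only on the direction of the vectors, not their length, which is why it is a common choice for comparing text embeddings.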

Every LLM test prompt, across ChatGPT models, Perplexity, and others, was logged via LLMClicks Prompt Tracker:

This created a complete longitudinal map of LLM behavior.

Before running the experiment, we documented four hypotheses:

Our findings contradicted all four hypotheses.

This is where everything gets interesting.

Despite:

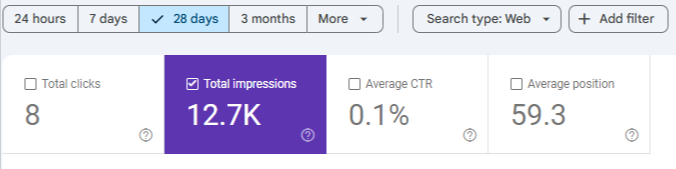

Google indexed us quickly and delivered:

This suggests Google’s early evaluation relies more heavily on:

…rather than historical factors.

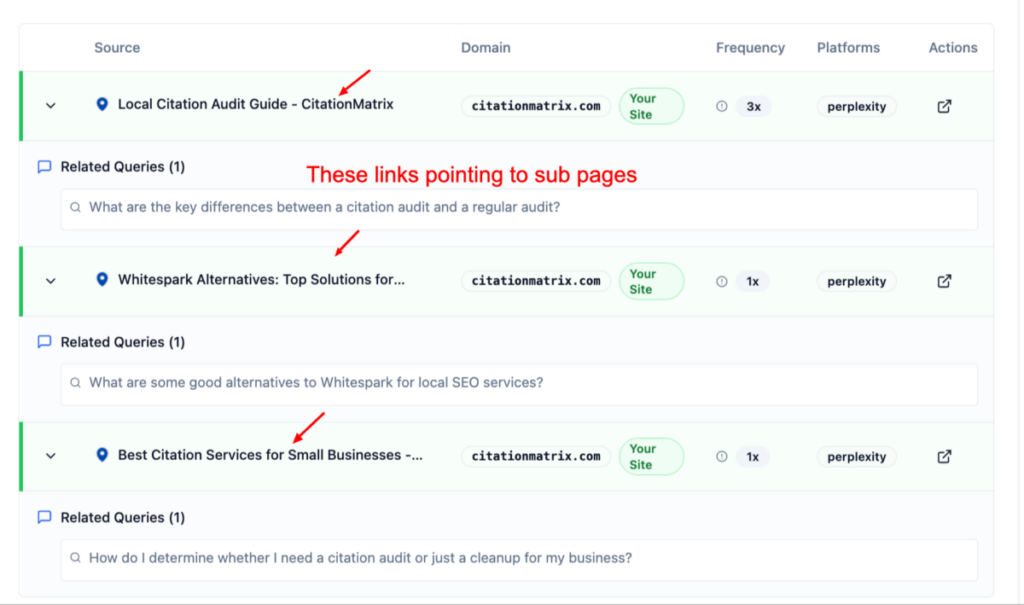

Perplexity demonstrated the most surprising behavior:

Even more surprising…

This proves LLMs rely on semantic density, not FAQ formatting.

This proves that ChatGPT:

Despite OpenAI’s stated knowledge cutoff of June 2024 for GPT-4.1, our 2025 content still:

Which suggests:

This is one of the most important observations in the entire experiment.

This single insight reshapes our understanding of how LLMs evaluate and rank content:

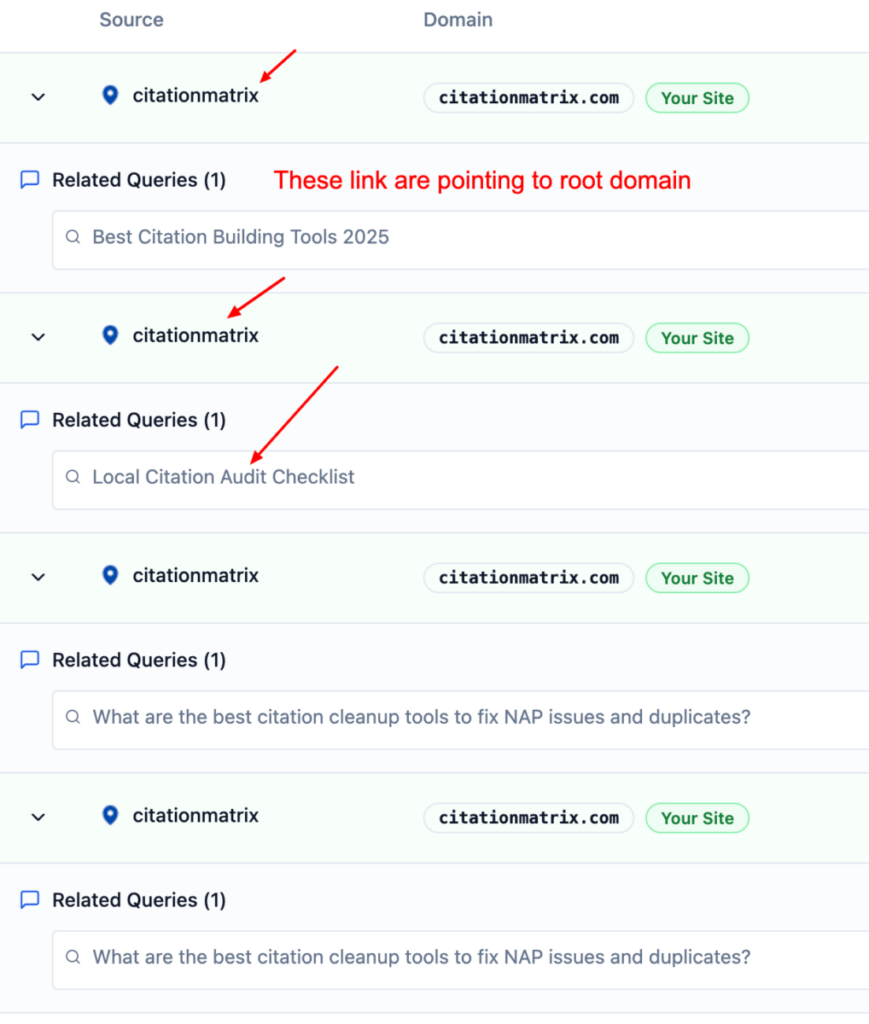

Instead, it sometimes cited the entire domain as if it were an established authority.

And this happened despite the fact that:

The domain was brand new

No backlinks existed

No historical authority signals existed

No external brand mentions existed

In other words, the model treated a zero-authority domain as if it had domain-level trust.

From these observations, several critical patterns emerged:

Even with no off-page signals, the model created a conceptual representation of the domain.

The structure and internal consistency of the site were enough to trigger domain-level trust.

Entity enrichment, content clustering, consistent formatting, and topical coverage outweighed lack of links.

Cross-linking, consistent headers, and shared entities created a recognizable knowledge graph.

This domain-level citation behavior is commonly seen with long-established sites such as:

Moz

BrightLocal

Whitespark

HubSpot

Semrush

These sites have:

Millions of backlinks

Decades of publishing history

Strong E-E-A-T signals

High editorial authority

Yet our brand-new domain exhibited the same behavior.

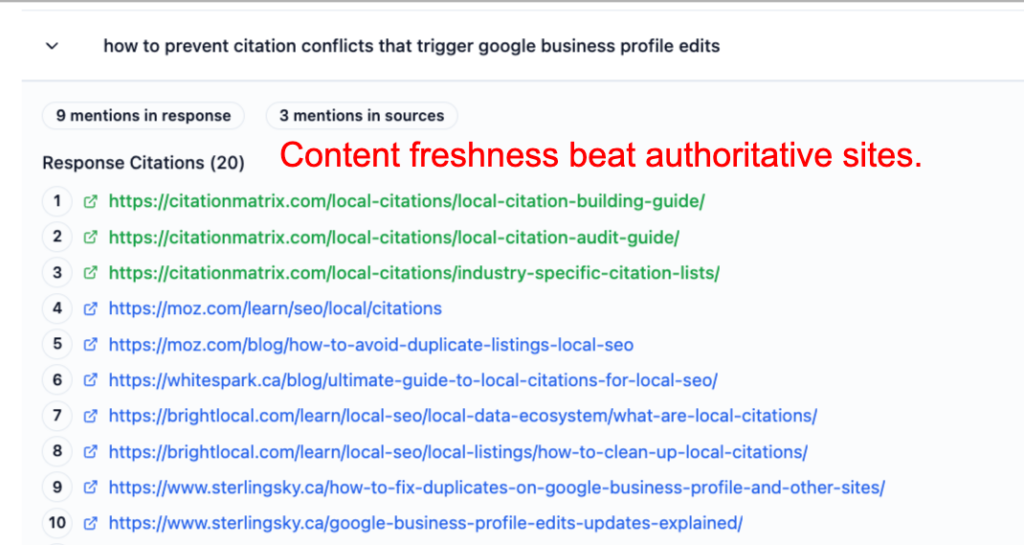

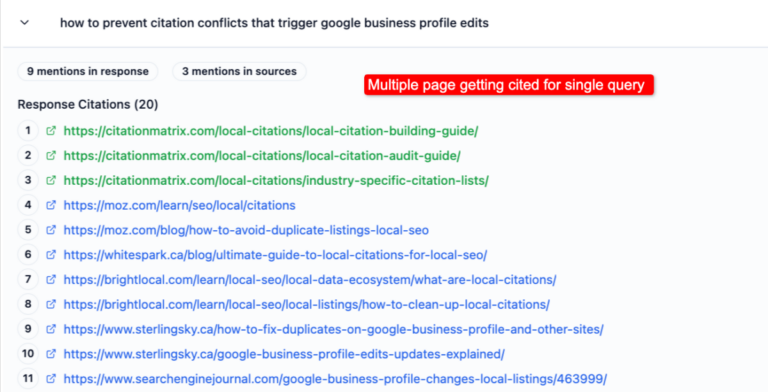

Across multiple LLM platforms, our new domain didn’t just appear; it often ranked above these established authority sites.

Screenshots show CitationMatrix.com cited:

Above Moz

Above BrightLocal

Above Whitespark

Above Semrush

Multiple times in a single answer

At the top of ChatGPT and Perplexity citation lists

This is not possible under traditional SEO ranking systems.

It demonstrates a fundamental shift:

LLMs prioritized:

Recent, up-to-date content

Dense entity coverage

Deep topical completeness

Semantic clarity and structure

Internal cohesion of the content network

Micro-snippets suitable for extraction

Clean, consistent formatting

These signals outweighed:

Backlinks

Domain age

Domain Rating

Historical trust

Legacy brand power

This finding is transformative for AI SEO:

LLMs reward semantic authority, not link authority.

LLMs reward knowledge structure, not domain history.

Our domain was effectively treated as a topical expert, even in its first few days online.

Across many queries:

This means:

This is impossible under traditional SEO models but completely logical under LLM retrieval models.

Backlinks are a human-designed proxy for trust.

LLMs don’t need them—they read the content directly.

Our fresh domain ranked in:

…within hours.

When content is:

LLMs form a domain-level mathematical representation.

This allows:

Because LLMs think in vectors—not keywords:

…all map back to the same domain cluster.

This is why we repeatedly saw two pages surface.
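One way to picture the "domain cluster" idea is to average a site's page embeddings into a single centroid and check how close differently worded queries land to it. The sketch below is a conceptual illustration only, not a description of how any LLM or retrieval system works internally. The vectors, query labels, and the `centroid` and `cosine` helpers are assumptions made for this example.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Toy page embeddings for one tightly focused site (hypothetical values).
page_vectors = [
    [0.90, 0.10, 0.00],
    [0.80, 0.20, 0.10],
    [0.85, 0.15, 0.05],
]
domain_vector = centroid(page_vectors)

# Two on-topic queries phrased differently, plus one unrelated query.
queries = {
    "on-topic phrasing 1": [1.0, 0.1, 0.0],
    "on-topic phrasing 2": [0.7, 0.3, 0.0],
    "off-topic": [0.0, 0.1, 1.0],
}
similarities = {name: cosine(vec, domain_vector) for name, vec in queries.items()}
```

In this toy setup both on-topic phrasings sit close to the centroid while the off-topic query does not, which mirrors the claim that varied wordings can resolve to the same topical cluster.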

Early impressions confirm:

To maintain scientific integrity:

This study disproves long-held SEO assumptions:

No, LLMs do NOT require backlinks

No, LLMs do NOT rely on domain age

No, LLMs do NOT follow Google’s authority systems

Instead, LLMs reward:

Semantic clarity

Topical depth

Entity richness

Structural coherence

Domain-level identity

We achieved:

The future of ranking is no longer link-based.

It is semantic-based.

This experiment marks the beginning of a new discipline:

Disclaimer:

LLM SEO is still in its early stages.

These findings reflect what we observed from our own website experiment, but different websites or industries may see different patterns.

AI models change fast, so treat these insights as a snapshot of how LLMs behaved at the time of testing—not a permanent rulebook.

© LLMClicks.ai All Rights Reserved 2026.