AI Visibility in 2026: How to Get Your Brand Seen by LLMs Like ChatGPT, Gemini & Perplexity

By 2026, many customers will stop searching and start asking. Instead of scrolling traditional Google results, they’ll rely on answers from ChatGPT, Gemini, and Perplexity to decide which brands to trust. This shift is already visible in how AI-generated summaries are replacing link-heavy search results and reshaping discovery.

That change creates a new kind of risk. AI systems don’t just list websites. They summarize brands. Your product, pricing, or positioning can be condensed into a few sentences before a user ever visits your site, or worse, without them visiting at all. As we’ve explained in our guide on what AI visibility really means and how it differs from SEO, being mentioned inaccurately can be more damaging than not being mentioned at all.

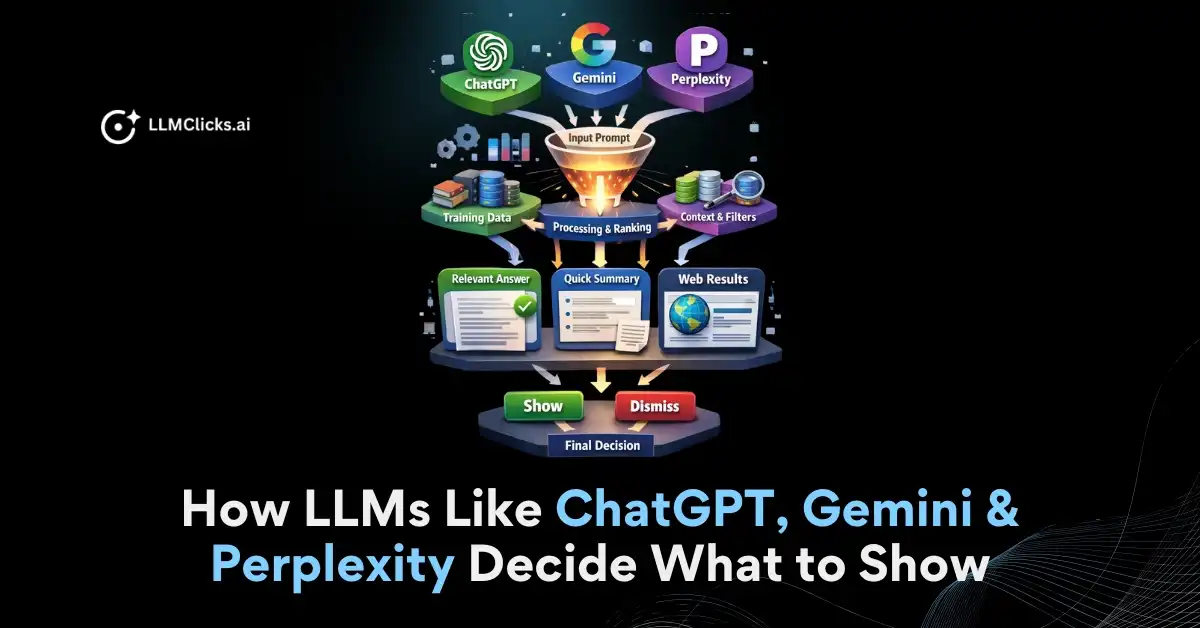

This guide explains what AI visibility really means in 2026, how large language models decide which brands to surface, and what you can do to make sure your brand is accurately represented in AI-generated answers. We’ll also build on concepts covered in our breakdown of how LLMs like ChatGPT, Gemini, and Perplexity decide what to show, so you understand the logic behind AI selection, not just the tactics.

You’ll learn practical strategies to improve visibility across ChatGPT, Gemini, and Perplexity, and how tools like LLMClicks.ai help teams track AI mentions, detect misinformation, and monitor brand accuracy across LLMs. The goal isn’t just visibility, but ensuring AI systems understand your brand correctly as generative search becomes the default discovery layer.

What “AI Visibility” Really Means in 2026

To understand why this shift matters, it’s important to clarify what AI visibility actually means in 2026.

AI visibility is no longer about how high your website ranks on a search results page. It refers to how well AI systems like ChatGPT, Gemini, and Perplexity can understand, trust, and reuse your information when generating answers. As AI Overviews and conversational responses replace traditional link lists, brand discovery increasingly happens inside the answer itself.

In practice, AI visibility shows up in three main forms: mentions, citations, and summaries. A mention is when an AI names your brand or product. A citation is when it references your website or content as a source. A summary is when AI condenses your offering, expertise, or positioning into a short explanation that shapes user perception, often without a click.

This is where AI visibility differs from traditional SEO. SEO rankings are designed to win clicks by appearing higher in search results. AI mentions work differently. They’re about being included in the generated response itself. A brand can rank well organically and still be ignored by AI, while another brand with clearer structure, stronger authority, or better context is surfaced and summarized instead.

How this visibility works also varies by platform. ChatGPT tends to favor authoritative sites, clear entity definitions, and well-structured content. Google Gemini and AI Overviews rely heavily on Google’s signals around expertise, freshness, and structured data. Perplexity places more emphasis on citations and community-driven sources such as forums and Reddit. Optimizing for just one system is no longer sufficient.

The key takeaway is simple: AI visibility is not a single channel. It’s an ecosystem spanning multiple AI platforms, content formats, and authority signals. Brands that recognize this shift and adapt their strategy will be the ones AI systems consistently surface, cite, and trust.

How LLMs Like ChatGPT, Gemini & Perplexity Decide What to Show

While each AI platform behaves slightly differently, large language models follow a similar core process. They draw on what they learned during training and, when search or browsing is enabled, on information retrieved from the web in real time. They then synthesize that material into a single, conversational answer. The goal isn't to list sources but to produce the most helpful response, weighed by authority, relevance, and clarity.

What changes between platforms is which signals they trust most and how they present information.

1. How ChatGPT Chooses Information

ChatGPT prioritizes clear, unambiguous information over link placement. In many cases, it summarizes information directly instead of sending users to websites.

It tends to favor:

- Authoritative websites with clear expertise

- Well-structured content that answers questions directly

- Strong entity signals (who the brand is, what it does, how it’s positioned)

- Consistent facts across multiple trusted sources

Because ChatGPT often delivers answers without links, brand visibility here depends heavily on how accurately your content can be interpreted and summarized, not just whether it ranks.

2. How Google Gemini & AI Overviews Decide What Appears

Gemini and Google AI Overviews are closely tied to Google’s existing understanding of the web.

They strongly reward:

- Pages that are well indexed by Google

- Clear demonstrations of E-E-A-T (experience, expertise, authority, trust)

- Structured formats such as FAQs, tables, and schema markup

- Fresh, updated content, especially for products and comparisons

Unlike traditional SERPs, AI Overviews may pull short explanations from multiple sources and blend them into a single answer. This means being structurally clear and technically sound matters as much as ranking.

3. How Perplexity Sources and Presents Answers

Perplexity behaves more like a citation-first research assistant.

It places higher weight on:

- Explicit citations and source links

- Community-driven platforms like Reddit and forums

- Concise, factual explanations that can stand alone

- Content that clearly supports claims with references

Perplexity is less about brand polish and more about verifiability. If your content is frequently cited, well-explained, and discussed in trusted communities, it has a much higher chance of appearing.

Although these platforms differ in presentation, they share one core principle:

LLMs surface content they can easily understand, trust, and reuse.

AI visibility isn’t about optimizing for one model. It’s about creating content and signals that work across an ecosystem where authority, structure, clarity, and consistency determine what gets shown.

Content Optimization Strategies to Get Seen by LLMs

Getting visibility in AI-generated answers starts with how your content is written, structured, and maintained. LLMs don’t “read” pages the way humans do. They extract clear, well-defined passages that can be confidently reused in summaries. The goal is to remove ambiguity and make your content easy to interpret at a glance.

1. Be Direct, Factual, and Easy to Summarize

LLMs strongly favor content that answers questions immediately. Long introductions, keyword-heavy filler, and vague positioning reduce the chances of your content being reused.

Best practices:

- Put the core answer in the first paragraph

- Write short, focused explanations (2 – 4 sentences)

- Use plain, factual language over marketing claims

- Avoid unnecessary preambles before addressing the topic

Think in terms of passage-level clarity. Each section should stand on its own and still make sense if extracted independently.
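One way to apply the 2–4 sentence guideline is a quick lint pass over draft copy. The Python sketch below is a rough heuristic, not an established tool. It assumes paragraphs are separated by blank lines and treats `.`, `!`, or `?` followed by whitespace as the end of a sentence, so abbreviations such as "e.g." will be miscounted. The function names are hypothetical.

```python
import re

# Rough heuristic: a sentence ends at ., ! or ? followed by whitespace.
# Abbreviations like "e.g." are miscounted; this is not a real tokenizer.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def count_sentences(paragraph: str) -> int:
    """Approximate the number of sentences in one paragraph."""
    return len([part for part in SENTENCE_END.split(paragraph.strip()) if part])


def flag_long_passages(text: str, max_sentences: int = 4) -> list[tuple[int, int]]:
    """Return (paragraph_index, sentence_count) for paragraphs over the limit.

    Paragraphs are assumed to be separated by blank lines.
    """
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    flagged = []
    for index, paragraph in enumerate(paragraphs):
        count = count_sentences(paragraph)
        if count > max_sentences:
            flagged.append((index, count))
    return flagged


if __name__ == "__main__":
    sample = (
        "Acme is a billing platform for SaaS teams. It automates invoices.\n\n"
        "We started in a garage. We grew fast. We hired. We raised money. "
        "We launched globally."
    )
    print(flag_long_passages(sample))  # [(1, 5)]
```

Flagged paragraphs are candidates for splitting, or for moving the core answer into the first sentence.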

2. Structure Content for AI Understanding

Clear structure is one of the strongest signals LLMs rely on to understand meaning and relevance.

How to structure content effectively:

- Use a logical heading hierarchy (H1 → H2 → H3)

- Frame sections around questions users actually ask

- Add FAQ-style blocks for common queries

- Use bullet points, numbered lists, and tables where possible

- Write clear product and business descriptions that remove confusion

Well-structured content helps AI systems identify topic shifts, key points, and definitions without guesswork.
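FAQ-style blocks can also be exposed to machines as schema.org `FAQPage` structured data. Here is a minimal Python sketch, assuming you already have the question-and-answer pairs shown on the page. `faq_jsonld` is a hypothetical helper. `FAQPage`, `Question`, `Answer`, `mainEntity`, and `acceptedAnswer` are schema.org vocabulary.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    faqs = [
        (
            "What is AI visibility?",
            "How well AI systems understand, trust, and reuse your brand information.",
        ),
    ]
    # The output would go inside <script type="application/ld+json"> on the page.
    print(faq_jsonld(faqs))
```

The markup should mirror the visible FAQ text exactly. Structured data that contradicts the page works against the clarity this section is aiming for.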

3. Use Language That Builds Authority and Trust

AI systems prioritize content that sounds credible, natural, and confident, not overly optimized.

Focus on:

- Conversational, human-readable language

- Clear explanations without heavy jargon

- Supporting claims with data, examples, or expert attribution

- Using related terms and synonyms to provide semantic context

This improves semantic clarity and signals expertise, which plays a key role in how LLMs evaluate trustworthiness.

4. Ensure Technical Accessibility for AI Crawlers

Content cannot surface if AI agents can’t access it reliably.

Key technical checks:

- Confirm AI crawlers are not blocked in robots.txt

- Maintain a clean, up-to-date XML sitemap

- Avoid unnecessary noindex tags on important pages

- Ensure fast load times and accessible page structure

Technical accessibility ensures your content can be pulled into an AI’s working context when generating answers.
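The first check in the list, whether robots.txt blocks AI crawlers, can be automated with Python's standard `urllib.robotparser`. This sketch parses robots.txt text you supply rather than fetching it. The agent list is an assumption: GPTBot and PerplexityBot are published crawler names, while Google-Extended is a control token that Google reads from robots.txt rather than a separate crawler.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens to test. This list is an assumption; confirm current
# names in each vendor's crawler documentation before relying on it.
AI_AGENTS = ("GPTBot", "PerplexityBot", "Google-Extended")


def blocked_agents(robots_txt: str, url: str, agents: tuple[str, ...] = AI_AGENTS) -> list[str]:
    """Return the agents that this robots.txt disallows from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    robots = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"
    print(blocked_agents(robots, "https://example.com/pricing"))  # ['GPTBot']
```

Run it against your live robots.txt for each important URL. Any agent it returns cannot retrieve that page, however well the content is written.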

5. Keep Content Fresh to Reduce AI Errors

Freshness is critical for accuracy. Outdated pages are a common source of AI errors, especially for pricing, features, and comparisons.

To reduce risk:

- Regularly update high-impact pages

- Clearly display “Last updated” dates where relevant

- Remove outdated claims or deprecated features

- Refresh statistics and examples

Updated content helps AI systems cross-verify information and reuse it with higher confidence.
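A simple way to decide which pages to refresh first is to flag those whose "Last updated" date is past a cutoff. This is a minimal Python sketch. The page inventory is hypothetical, and the 180-day default is an illustrative assumption, not a known threshold used by any AI system.

```python
from datetime import date


def stale_pages(last_updated: dict[str, date], today: date, max_age_days: int = 180) -> list[str]:
    """Return URLs not updated within max_age_days, oldest first."""
    stale = [
        (updated, url)
        for url, updated in last_updated.items()
        if (today - updated).days > max_age_days
    ]
    return [url for _, url in sorted(stale)]


if __name__ == "__main__":
    pages = {  # hypothetical inventory: URL -> last updated date
        "/pricing": date(2025, 1, 10),
        "/features": date(2025, 11, 2),
        "/compare": date(2024, 6, 1),
    }
    print(stale_pages(pages, today=date(2026, 1, 15)))  # ['/compare', '/pricing']
```

Passing `today` explicitly keeps the check deterministic and easy to test. In practice you would likely use a shorter cutoff for pricing and comparison pages than for evergreen guides.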

Optimizing for LLMs is less about keyword placement and more about clarity, structure, authority, and freshness. When your content is easy to extract, factually consistent, and technically accessible, AI systems are far more likely to surface your brand in trusted answers.

Authority Building Beyond Your Website (Critical for 2026)

In 2026, AI systems do not rely on your website alone to judge credibility. They cross-check information across the web to confirm authority, consistency, and trust. Brands that invest only in on-site optimization miss a major part of how LLMs decide what to surface.

Authority is now built across an ecosystem, not a single domain.

1. Earn Mentions From Trusted Sources

LLMs place higher confidence in brands that are referenced by independent, authoritative sources.

Focus on earning mentions from:

- Wikipedia, where applicable, for factual brand context

- Industry publications that regularly appear in AI citations

- High-authority blogs and research-driven sites

These mentions act as external validation. When multiple trusted sources describe your brand consistently, AI systems are more likely to reuse and cite that information.

2. Engage Where AIs Learn: Reddit, Forums, and Communities

Community platforms play a disproportionate role in AI visibility, especially for systems like Perplexity and Google AI Overviews.

Why Reddit matters:

- Frequently cited as a source for real-world opinions

- Covers use cases, comparisons, and troubleshooting

- Signals authenticity and experience

Thoughtful participation matters more than promotion. Clear explanations, honest answers, and problem-solving discussions increase the likelihood that your brand is referenced when AI models summarize community knowledge.

3. Strengthen Brand Entity Consistency Across the Web

AI models rely on entity recognition to understand who you are and what you do. Inconsistent descriptions create confusion and reduce trust.

Ensure the same core brand description appears across:

- Your website

- LinkedIn company page

- Crunchbase and similar profiles

- Business directories and listings

Consistency helps AI systems connect mentions across platforms and confidently attribute information to the correct brand. Conflicting messaging, outdated descriptions, or unclear positioning increase the risk of omission or misrepresentation.
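To spot drift between profiles, you can compare each platform's description against your canonical one. The Python sketch below uses the standard library's `difflib.SequenceMatcher` as a crude character-level similarity score. It catches reworded or outdated copy but not subtle factual contradictions. The function name and the 0.8 threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def _normalize(text: str) -> str:
    """Lower-case and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())


def inconsistent_profiles(
    canonical: str, profiles: dict[str, str], threshold: float = 0.8
) -> dict[str, float]:
    """Return {platform: similarity} for descriptions that drift from the canonical one."""
    base = _normalize(canonical)
    drifted = {}
    for platform, description in profiles.items():
        ratio = SequenceMatcher(None, base, _normalize(description)).ratio()
        if ratio < threshold:
            drifted[platform] = round(ratio, 2)
    return drifted


if __name__ == "__main__":
    canonical = "Acme is a billing platform for SaaS teams."  # hypothetical brand
    profiles = {
        "LinkedIn": "Acme is a billing platform for SaaS teams.",
        "Directory": "Acme Widgets makes garden tools.",
    }
    print(inconsistent_profiles(canonical, profiles))
```

A flagged profile is a prompt for a human to review and update that listing. It is not proof of an error.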

Technical & Ecosystem Factors That Influence AI Visibility

AI visibility is shaped as much by infrastructure and signals as by content itself. Even high-quality pages can be ignored if AI systems struggle to access, interpret, or validate them. This is where technical foundations and ecosystem presence play a decisive role.

Site Performance and Accessibility

AI systems favor content they can retrieve quickly and reliably.

Key factors that influence visibility:

- Clean crawlability and indexing for AI crawlers such as GPTBot, plus correct settings for control tokens such as Google-Extended

- Logical site architecture with minimal reliance on heavy client-side JavaScript

- Fast load times and strong Core Web Vitals

- Secure HTTPS implementation

Slow, inaccessible, or poorly structured sites tend to be crawled less frequently and cited less often in AI-generated answers.

Clean Internal Linking and Semantic Structure

Internal linking helps AI systems understand topic relationships and importance across your site.

Best practices include:

- Clear, descriptive internal anchor text

- Logical linking between related pages and clusters

- Proper heading hierarchy using semantic HTML (H1, H2, H3)

This improves context extraction and increases the likelihood that individual passages are reused in AI summaries.
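Two of these practices, descriptive anchor text and an unbroken heading hierarchy, can be checked per page with Python's built-in `html.parser`. This is a minimal sketch. The class and function names are hypothetical, and the list of "vague" anchor phrases is an illustrative assumption you would adapt to your own site.

```python
from html.parser import HTMLParser

# Anchor texts treated as vague; this set is an illustrative assumption.
VAGUE_ANCHORS = {"click here", "here", "read more", "learn more", "this page"}


class PageStructure(HTMLParser):
    """Collect heading levels and link anchor texts in document order."""

    def __init__(self):
        super().__init__()
        self.levels: list[int] = []
        self.anchor_texts: list[str] = []
        self._in_link = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))
        elif tag == "a":
            self._in_link = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            # Collapse whitespace inside the link text.
            self.anchor_texts.append(" ".join("".join(self._buffer).split()))
            self._in_link = False

    def handle_data(self, data):
        if self._in_link:
            self._buffer.append(data)


def audit_page(html: str) -> dict[str, list]:
    """Report skipped heading levels (e.g. H2 -> H4) and vague anchor texts."""
    parser = PageStructure()
    parser.feed(html)
    parser.close()
    levels = parser.levels
    skips = [(a, b) for a, b in zip(levels, levels[1:]) if b > a + 1]
    vague = [t for t in parser.anchor_texts if t.lower() in VAGUE_ANCHORS]
    return {"heading_skips": skips, "vague_anchors": vague}


if __name__ == "__main__":
    page = (
        "<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"
        "<a href='/pricing'>Click here</a><a href='/faq'>AI visibility FAQ</a>"
    )
    print(audit_page(page))  # {'heading_skips': [(2, 4)], 'vague_anchors': ['Click here']}
```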

Citations and References Inside Content

AI systems are more confident summarizing content that is verifiable.

Verifiable content typically includes:

- References to trusted sources

- Data-backed statements

- Clear attribution for claims and statistics

These elements help AI models cross-check information and treat your content as reliable. This is especially important for comparison, pricing, and decision-oriented topics.

Multi-Platform Presence Beyond Your Blog

AI models pull information from a distributed web, not just standalone blogs.

Visibility improves when your brand appears consistently across:

- Authoritative publications and research sites

- Community platforms and forums

- Knowledge databases and directories

Each AI system sources the web differently. Being present across multiple platforms increases the chances your brand is selected, summarized, or cited.

How to Measure and Monitor AI Visibility in 2026

Understanding AI visibility is only useful if you can measure it consistently. In 2026, relying on intuition or occasional checks is no longer enough. AI-generated answers change frequently, vary by prompt, and differ across platforms.

Why Manual Checking Doesn’t Scale

Manually typing prompts into ChatGPT or Perplexity gives only a partial, and often misleading, view.

Key limitations:

- Results vary by time, location, and model version

- You can only test a small number of prompts

- There’s no historical tracking or trend analysis

- Subtle changes in wording can produce different answers

What looks accurate today may be incorrect tomorrow, and manual checks won’t surface those shifts in time.

Why Google Analytics Alone Is Not Enough

Google Analytics can show click-through traffic from AI platforms, but it misses most of what matters.

What GA can’t tell you:

- Where your brand is mentioned without a link

- Which prompts trigger AI visibility

- How competitors are positioned

- Whether AI is describing your brand correctly

Since many AI answers are zero-click, relying on traffic data alone creates blind spots.
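To make the limitation concrete, here is what click-based measurement can see. This Python sketch groups referrer URLs by AI platform. The hostname list is an assumption you should verify against your own logs, since these domains change over time (ChatGPT, for example, moved from chat.openai.com to chatgpt.com). Anything the assistant says about your brand without producing a click never reaches this data.

```python
from collections import Counter
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; verify against your own logs.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
}


def classify_referrer(referrer_url: str) -> Optional[str]:
    """Map a referrer URL to an AI platform name, or None if it is not one."""
    host = urlparse(referrer_url).hostname or ""  # .hostname is already lower-case
    return AI_REFERRERS.get(host)


def ai_referral_counts(referrers: list[str]) -> Counter:
    """Count visits per AI platform from a list of referrer URLs."""
    return Counter(p for p in map(classify_referrer, referrers) if p)


if __name__ == "__main__":
    visits = [
        "https://www.perplexity.ai/search/abc",
        "https://chatgpt.com/",
        "https://news.ycombinator.com/",
    ]
    print(ai_referral_counts(visits))  # Counter({'Perplexity': 1, 'ChatGPT': 1})
```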

What You Actually Need to Track in 2026

Effective AI visibility monitoring focuses on three core questions:

- Where does your brand appear? Across which AI platforms, prompts, and answer types.

- How is your brand described? Positioning, features, pricing, and comparisons.

- Is the information accurate? Detection of outdated details, hallucinated features, or brand confusion.

Tracking these dimensions helps you understand not just presence, but impact.
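Once AI answers are being collected, the "where" and "is it accurate" questions reduce to simple aggregation. This Python sketch assumes you already have recorded answers as (platform, prompt, answer) records; how they are gathered is out of scope. The brand name, prompts, and outdated price are hypothetical. Matching uses plain case-insensitive substrings, which is a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass
class AnswerRecord:
    """One recorded AI answer (collection method not shown)."""
    platform: str
    prompt: str
    answer: str


def mention_rate(records: list[AnswerRecord], brand: str) -> dict[str, float]:
    """Share of recorded answers per platform that name the brand."""
    totals: dict[str, int] = {}
    hits: dict[str, int] = {}
    for r in records:
        totals[r.platform] = totals.get(r.platform, 0) + 1
        if brand.lower() in r.answer.lower():
            hits[r.platform] = hits.get(r.platform, 0) + 1
    return {p: hits.get(p, 0) / n for p, n in totals.items()}


def outdated_claims(records: list[AnswerRecord], outdated_terms: list[str]) -> list[tuple[str, str, str]]:
    """Return (platform, prompt, term) for answers that repeat known-outdated facts."""
    flags = []
    for r in records:
        text = r.answer.lower()
        for term in outdated_terms:
            if term.lower() in text:
                flags.append((r.platform, r.prompt, term))
    return flags


if __name__ == "__main__":
    records = [  # hypothetical data
        AnswerRecord("ChatGPT", "best billing tools", "Acme and Beta are popular."),
        AnswerRecord("ChatGPT", "acme pricing", "Acme costs $49/month."),
        AnswerRecord("Perplexity", "best billing tools", "Beta is widely used."),
    ]
    print(mention_rate(records, "Acme"))  # {'ChatGPT': 1.0, 'Perplexity': 0.0}
    print(outdated_claims(records, ["$49/month"]))  # [('ChatGPT', 'acme pricing', '$49/month')]
```

Storing each run with a timestamp would provide the historical trend view that manual spot checks lack.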

The Shift Toward AI Visibility Monitoring Tools

As AI becomes a primary discovery layer, brands are turning to dedicated tools that monitor visibility across multiple LLMs, track changes over time, and flag inaccuracies early. Platforms like LLMClicks.ai are built to surface how AI systems interpret your brand and highlight where corrections are needed before misinformation affects trust or revenue.

How LLMClicks.ai Helps Brands Improve AI Visibility

As AI platforms increasingly shape brand discovery, many teams face the same challenge: they don’t know how AI systems actually interpret their brand.

LLMClicks.ai helps close that gap by showing how brands appear across AI platforms and where interpretation issues occur. Instead of focusing only on visibility, it highlights whether AI-generated descriptions are clear, consistent, and accurate.

Where LLMClicks.ai Is Most Useful

LLMClicks.ai helps teams:

- Track brand mentions across ChatGPT, Gemini, and Perplexity

- Identify misinformation or hallucinated details

- Understand how AI summarizes products, features, and pricing

- Improve content structure to reduce ambiguity

This makes it easier to act on AI visibility issues before they affect perception or decisions.

Who Benefits Most

- SaaS companies, where small inaccuracies can impact conversions

- Agencies, managing AI visibility for multiple clients

- Brands with complex offerings, where clarity is essential

AI Visibility Checklist for 2026

Use this checklist to evaluate whether your brand is ready to be surfaced, summarized, and trusted by AI systems in 2026.

- Clear brand entity description: Your brand has a consistent, unambiguous description explaining who you are, what you do, and who you serve.

- Structured, answer-first content: Key pages include FAQ-style sections that directly answer common questions in simple, factual language.

- Schema markup in place: Relevant schema (FAQ, Article, Product, Organization) is implemented to help AI understand content context and relationships.

- Authoritative off-site presence: Your brand is mentioned consistently across trusted sources such as industry publications, communities, and knowledge platforms.

- Regular accuracy checks of AI answers: AI-generated summaries of your brand are reviewed periodically to catch outdated or incorrect information.

- Visibility tracking across multiple LLMs: Brand presence is monitored across ChatGPT, Gemini, Perplexity, and other AI platforms, not just one system.

Conclusion: AI Visibility Is About Being Understood, Not Just Seen

Ultimately, AI will increasingly mediate how people discover, evaluate, and trust brands. As ChatGPT, Gemini, and Perplexity become default answer engines, visibility alone is no longer enough. Being surfaced with inaccurate or unclear information carries real risk, while brands that prioritize clarity and authority gain a compounding advantage over time.

The brands that win in 2026 will be those that invest early in being understood correctly, by structuring content for AI, maintaining consistent entity signals, and validating how their brand is summarized across platforms. This is where tools like LLMClicks.ai help close the gap between visibility and understanding, turning AI discovery into something measurable and manageable.

Moving forward, the question isn’t whether AI will influence brand discovery. It already does. The real question is whether your brand is ready to be trusted by the systems shaping those answers.

Frequently Asked Questions (FAQs)

Q1. What is AI visibility in 2026?

Ans: AI visibility in 2026 refers to how well a brand is understood, trusted, and surfaced by AI systems like ChatGPT, Gemini, and Perplexity when they generate answers. It focuses on mentions, citations, and accurate summaries rather than traditional search rankings.

Q2. How is AI visibility different from SEO?

Ans: SEO focuses on ranking web pages in search engine results to earn clicks. AI visibility focuses on whether a brand appears inside AI-generated answers, even when no link is shown. A brand can rank well in Google but still be absent from AI responses.

Q3. How do ChatGPT, Gemini, and Perplexity decide which brands to show?

Ans: These platforms analyze authority, clarity, and consistency across the web. ChatGPT favors structured and authoritative content, Gemini relies heavily on Google’s E-E-A-T signals, and Perplexity prioritizes clear citations and community sources like Reddit.

Q4. Why is accuracy important for AI visibility?

Ans: Accuracy matters because AI systems can summarize or compare brands without users visiting the website. Incorrect pricing, features, or positioning in AI answers can reduce trust and negatively impact buying decisions.

Q5. How can brands improve AI visibility?

Ans: Brands can improve AI visibility by creating clear and structured content, using schema markup, maintaining consistent brand descriptions across platforms, earning authoritative mentions, and regularly updating content to prevent outdated information.

Q6. How can AI visibility be tracked or monitored?

Ans: AI visibility can be monitored by tracking brand mentions, citations, and summaries across multiple LLMs. Dedicated AI visibility tools help identify where a brand appears, how it is described, and whether the information is accurate.