AI Brand Reputation Management: Why Hallucinations Are The New Crisis (And Traditional Tools Can't Save You)

You’ve set up Google Alerts. You’re monitoring mentions on Brand24. Your team responds to every negative review within 2 hours. Your G2 rating? A perfect 4.8 stars. Your Trustpilot reviews? Glowing.

Yet right now, thousands of potential customers are asking ChatGPT about your brand and getting answers that are completely, demonstrably wrong. Wrong pricing. Wrong features. Wrong positioning. Some are being told your product doesn’t exist. Others are hearing about “controversies” that never happened.

The crisis? You have no idea it’s happening. Traditional brand reputation management tools weren’t built to detect AI hallucinations, and by the time you discover the damage, months of misinformation have already shaped buyer perceptions.

Here’s the brutal reality: In 2026, your brand’s reputation no longer lives primarily in Google search results, review sites, or social media mentions. It lives inside the training data and response patterns of ChatGPT, Perplexity, Claude, and Google Gemini. And when AI hallucinates about your brand, traditional reputation tools are completely blind to it.

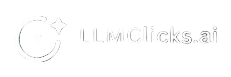

Why Traditional Brand Reputation Tools Miss 90% of AI Mentions

I discovered this problem the hard way. Our SaaS product was being mentioned in ChatGPT responses, which should have been great news. Except AI was telling users our Pro plan cost $79 per month. Our actual price? $99.

Prospects would show up to demo calls saying “I saw on ChatGPT that your pricing is $79.” When we explained the correct pricing, they’d accuse us of bait and switch tactics. Our demo-to-close rate dropped 23% over two months before we figured out what was happening.

Brand24 showed nothing unusual. Our social sentiment was positive. Google Alerts sent zero warnings. Every traditional reputation tool we used said everything was fine.

They were all wrong.

Here’s why traditional brand reputation management tools completely miss AI hallucinations:

| Traditional Reputation Monitoring | AI Reputation Reality |
| --- | --- |
| Tracks Google search rankings | AI synthesizes answers, bypassing search results entirely |
| Monitors social media mentions | ChatGPT doesn’t cite Twitter; it cites Reddit threads from 2022 |
| Flags negative reviews | Doesn’t detect when AI invents fake controversies |
| Shows sentiment analysis | Can’t catch when AI attributes competitor features to you |
| Alerts on brand mentions | Misses when AI says “we couldn’t find information about your brand” |

Tools like Mention, Brand24, and Sprout Social were designed for a world where reputation lived in observable mentions: tweets, blog posts, news articles, reviews. They excel at tracking what people say about you in public forums.

But AI platforms like ChatGPT, Perplexity, and Claude don’t work like traditional search. When someone asks “what’s the best project management tool for remote teams,” AI doesn’t show a list of links. It synthesizes an answer from its training data, and that answer might include information about your brand that’s three years outdated, factually incorrect, or completely hallucinated.

Worse, these AI-generated answers don’t leave trackable mentions. There’s no tweet to monitor, no blog post to respond to. The conversation happens in a closed loop between the user and the AI, invisible to your traditional monitoring tools.

The numbers tell the story:

- 67% error rate in AI-generated news citations (2025 study)

- Reddit outranks corporate sites across ALL industries in ChatGPT responses

- Average lag time between website updates and AI training data: 6 to 18 months

According to recent research, LLMs cite Reddit and editorial content for over 60% of brand information, not corporate websites. If your brand reputation strategy only monitors official channels, you’re missing the primary sources shaping AI’s understanding of your brand.

What Are AI Hallucinations? (And Why They're Worse Than Negative Reviews)

AI Hallucination happens when AI generates false information with complete confidence. It doesn’t say “I’m not sure” or “this might be incorrect.” It states fabricated information as fact.

AI Misinformation is when AI repeats outdated or biased information from its training data. The source information may have been accurate in 2022, but it’s wrong in 2026.

Both destroy brand reputation. Neither shows up in traditional monitoring tools.

Why Hallucinations Are Worse Than Negative Reviews

A negative review is visible. You can see it on G2, Trustpilot, or Google Reviews. You can respond, apologize, explain what went wrong, offer a solution. Future customers see both the complaint and your response. It’s painful but manageable.

An AI hallucination is invisible. Hundreds or thousands of potential customers get the wrong information before ever visiting your website. They make decisions based on false data. They form opinions about your pricing, features, or positioning without knowing those opinions are based on fabrications.

There’s no comment thread where you can clarify. No review platform where you can respond. No public forum where you can set the record straight. The misinformation spreads silently, shaping perceptions without your knowledge, influence, or ability to respond.

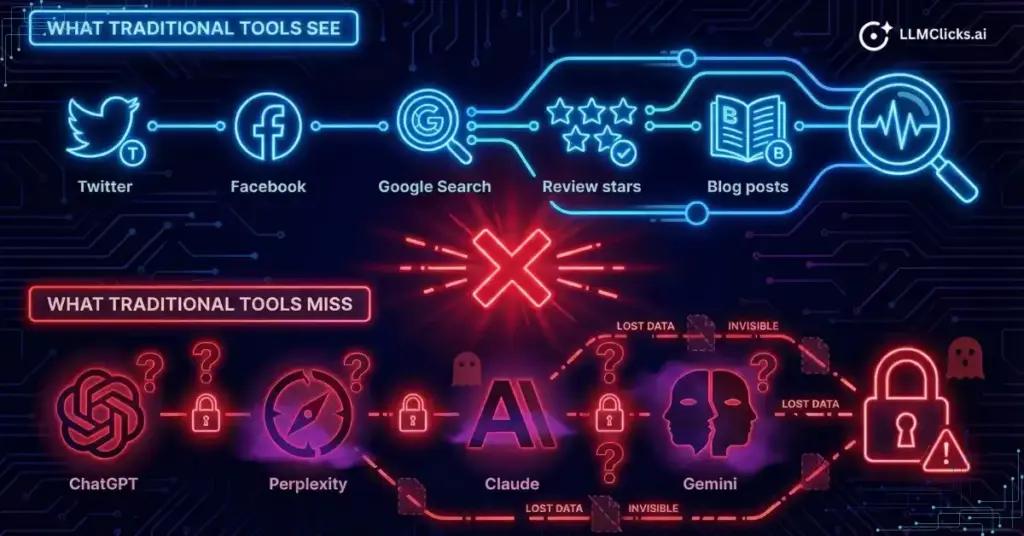

The Five Types of Brand Hallucinations

Type 1: Entity Collision (Brand Name Confusion)

Two brands with similar names get merged in AI’s understanding. Think Apple the tech company vs Apple Corps (the Beatles’ company), or Delta (airline vs faucets vs dental).

Your Risk: High if you have a generic brand name or share a name with another entity.

Fix Difficulty: High. Requires comprehensive entity disambiguation through schema markup and clear positioning.

Type 2: Attribute Transfer (Competitor Feature Confusion)

AI correctly identifies your brand but assigns competitor attributes to you.

Example: Describing your pricing model using your competitor’s tier structure, or saying you have features that actually belong to a competitor.

Your Risk: Medium to high in crowded markets.

Fix Difficulty: Medium. Needs strong differentiation signals and structured data.

Type 3: Temporal Confusion (Outdated Information)

AI presents old information as current because its training data has a lag.

Example: Your 2022 pricing shown as if it’s still valid in 2026. Features you deprecated years ago still described as active.

Your Risk: High if you frequently update pricing or change features.

Fix Difficulty: Medium. Requires fresh, authoritative content with clear dates.

Type 4: Relationship Fabrication (False Partnerships)

AI invents partnerships, integrations, or associations that don’t exist.

Example: “Works seamlessly with Salesforce” when you have no Salesforce integration.

Your Risk: Medium if you operate in integration-heavy ecosystems.

Fix Difficulty: Low to medium. Clear integration documentation helps.

Type 5: Complete Fabrication (Invented Information)

AI invents entirely new information with no basis in any source.

Example: Fake product launches, controversies that never happened, features that don’t exist.

Your Risk: Higher for newer brands with limited online presence.

Fix Difficulty: Very high. Requires building authoritative content from scratch.

Hallucination frequency based on testing hundreds of brands:

- Temporal Confusion: 41% (outdated information)

- Attribute Transfer: 28% (competitor features assigned wrong)

- Relationship Fabrication: 18% (false partnerships)

- Complete Fabrication: 9% (entirely invented)

- Entity Collision: 4% (brand name confusion)

The good news? The most common types are also the most fixable.

Why Traditional Brand Reputation Management Is Failing in 2026

2020-2023: The Traditional Model

Your brand’s reputation lived in observable places: Google search results, review sites, social media, news coverage, forums. You monitored these with tools like Mention, Brand24, and Google Alerts. When someone said something about your brand, you’d get an alert and could respond.

2024-2025: The AI Transition

ChatGPT, which launched in late 2022, went mainstream and changed everything. People stopped Googling “best project management tool” and started asking ChatGPT conversational questions. AI would synthesize answers without showing sources. Users would trust those answers and make decisions without visiting your website.

2026: The Zero-Click Reality

Today, over 40% of searches that would have gone to Google are now happening in ChatGPT, Perplexity, Claude, or Gemini. Most of these searches never result in a website click. Users get their answer directly from AI and make decisions based entirely on what AI tells them.

The Three Forces Breaking Traditional Reputation Management

Force 1: Zero-Click AI Search

When someone asks Google a question, you at least see traffic data. When someone asks ChatGPT the same question, you see nothing. The conversation is private. Your brand could be mentioned thousands of times in AI responses, and your analytics would show zero trace.

Force 2: Training Data Lag

AI models aren’t updated in real-time. Current estimates:

- ChatGPT (GPT-4): 6-12 months behind

- Claude: 4-8 months behind

- Perplexity: 1-3 months behind

- Google Gemini: Variable

You can update your pricing today, and AI might still cite old pricing for another six months.

Force 3: Synthetic Knowledge Creation

AI doesn’t just retrieve information. It synthesizes new narratives by combining your website, Reddit threads, competitor comparisons, and user context into entirely new answers. A single Reddit complaint from 2022 can outweigh your entire corporate website.

The data:

- 60% of brand information in LLM responses comes from editorial content, NOT corporate websites

- Reddit is cited more than official websites in 73% of ChatGPT brand queries

- Average website update to LLM training lag: 6-18 months

Traditional tools monitor public mentions. But AI creates impressions without evidence. That’s the fundamental gap.

How to Audit Your Brand's AI Reputation

Before you can fix AI hallucinations, you need to know what you’re dealing with.

The Manual DIY Audit Method

Step 1: Create Your Prompt Library (20-30 prompts)

Brand Awareness:

- “What is [Your Brand Name]?”

- “Tell me about [Your Brand Name]”

Comparison:

- “Compare [Your Brand] vs [Top Competitor]”

- “[Your Brand] or [Competitor] for [use case]?”

Buying Intent:

- “Best [category] tool for [specific use case]”

- “Top 5 [category] platforms for [team size]”

Features:

- “Does [Your Brand] have [feature]?”

- “Does [Your Brand] integrate with [platform]?”

Pricing:

- “How much does [Your Brand] cost?”

- “[Your Brand] pricing plans”
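If you'd rather not type out every variation by hand, the prompt library can be generated from templates. The sketch below is one way to do it in Python. The brand, competitors, and other values are made-up placeholders, and `build_prompt_library` is a hypothetical helper, not part of any tool mentioned in this article.

```python
from itertools import product
from string import Formatter

# Templates mirror the prompt categories listed above.
TEMPLATES = {
    "brand_awareness": ["What is {brand}?", "Tell me about {brand}"],
    "comparison": [
        "Compare {brand} vs {competitor}",
        "{brand} or {competitor} for {use_case}?",
    ],
    "buying_intent": [
        "Best {category} tool for {use_case}",
        "Top 5 {category} platforms for {team_size}",
    ],
    "features": [
        "Does {brand} have {feature}?",
        "Does {brand} integrate with {platform}?",
    ],
    "pricing": ["How much does {brand} cost?", "{brand} pricing plans"],
}


def build_prompt_library(values):
    """Expand every template with every combination of placeholder values.

    `values` maps a placeholder name (e.g. "competitor") to a list of options.
    Returns a list of (group, prompt) tuples.
    """
    library = []
    for group, templates in TEMPLATES.items():
        for template in templates:
            # Collect the placeholder names used by this template, in order.
            fields = [name for _, name, _, _ in Formatter().parse(template) if name]
            fields = list(dict.fromkeys(fields))
            for combo in product(*(values[f] for f in fields)):
                library.append((group, template.format(**dict(zip(fields, combo)))))
    return library


# Placeholder values for illustration only.
values = {
    "brand": ["Acme PM"],
    "competitor": ["Rival A", "Rival B"],
    "category": ["project management"],
    "use_case": ["remote teams"],
    "team_size": ["small teams"],
    "feature": ["time tracking"],
    "platform": ["Slack"],
}

library = build_prompt_library(values)
for group, prompt in library:
    print(f"{group}: {prompt}")
```

Adding a second use case or a few more features quickly gets you into the 20-30 prompt range.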

Step 2: Test Across Platforms

Test each prompt on:

- ChatGPT (GPT-4)

- Perplexity AI

- Claude

- Google Gemini

- Microsoft Copilot

Step 3: Document in Spreadsheet

Columns: Prompt | Platform | Response | Accuracy (Correct/Wrong/Hallucination) | Impact Level (Critical/High/Medium/Low) | Source Cited
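If you prefer a CSV file over a hand-built spreadsheet, a minimal Python sketch like the one below keeps the columns and allowed values consistent. The sample row reuses the $79 vs $99 pricing example from earlier, and the "Source Cited" value is invented for illustration. `write_audit_log` is a hypothetical helper.

```python
import csv
import io

COLUMNS = ["Prompt", "Platform", "Response", "Accuracy", "Impact Level", "Source Cited"]
ACCURACY_VALUES = {"Correct", "Wrong", "Hallucination"}
IMPACT_VALUES = {"Critical", "High", "Medium", "Low"}


def write_audit_log(rows, out):
    """Write audit rows as CSV, rejecting values outside the agreed labels."""
    writer = csv.DictWriter(out, fieldnames=COLUMNS)
    writer.writeheader()
    for row in rows:
        if row["Accuracy"] not in ACCURACY_VALUES:
            raise ValueError(f"Unknown accuracy label: {row['Accuracy']}")
        if row["Impact Level"] not in IMPACT_VALUES:
            raise ValueError(f"Unknown impact level: {row['Impact Level']}")
        writer.writerow(row)


# Illustrative row only; replace with what you actually observe.
rows = [
    {
        "Prompt": "How much does [Your Brand] cost?",
        "Platform": "ChatGPT",
        "Response": "The Pro plan costs $79 per month.",
        "Accuracy": "Wrong",
        "Impact Level": "Critical",
        "Source Cited": "none shown",
    }
]

buffer = io.StringIO()  # swap for open("audit.csv", "w", newline="") to write a file
write_audit_log(rows, buffer)
print(buffer.getvalue())
```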

Step 4: Identify Patterns

- Which platforms get you most wrong?

- Which topics trigger hallucinations?

- What sources is AI citing?

- How old is the information?
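Once the audit rows exist, the first question (which platforms get you most wrong) is a simple tally. Here is a rough Python sketch, assuming each row is a dict with the "Platform" and "Accuracy" columns from Step 3. The sample rows are invented.

```python
from collections import Counter


def error_rate_by_platform(rows):
    """Return {platform: share of responses not marked "Correct"}."""
    totals = Counter()
    errors = Counter()
    for row in rows:
        totals[row["Platform"]] += 1
        if row["Accuracy"] != "Correct":
            errors[row["Platform"]] += 1
    return {platform: errors[platform] / totals[platform] for platform in totals}


# Invented sample data for illustration.
audit_rows = [
    {"Platform": "ChatGPT", "Accuracy": "Wrong"},
    {"Platform": "ChatGPT", "Accuracy": "Correct"},
    {"Platform": "Claude", "Accuracy": "Hallucination"},
    {"Platform": "Perplexity", "Accuracy": "Correct"},
]

rates = error_rate_by_platform(audit_rows)
worst = max(rates, key=rates.get)
print(rates)
print("Least accurate platform:", worst)
```

The same tally works for topics if you add a "Topic" column and group on that instead.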

Step 5: Prioritize Fixes

Fix Immediately: Wrong pricing, fake controversies, invented features, legal misrepresentations

Fix This Month: Outdated major features, incorrect positioning, wrong integrations

Fix This Quarter: Minor inaccuracies, incomplete information

Monitor: Generic descriptions, minor phrasing issues
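The four buckets above can be encoded as a lookup so every finding gets triaged the same way. This is a sketch under the assumption that you tag each finding with one of the issue types named above. The "Unclassified" fallback is my addition for anything that doesn't match.

```python
BUCKET_ORDER = ["Fix Immediately", "Fix This Month", "Fix This Quarter", "Monitor"]

# Issue types taken from the priority list above.
ISSUE_BUCKETS = {
    "wrong pricing": "Fix Immediately",
    "fake controversy": "Fix Immediately",
    "invented feature": "Fix Immediately",
    "legal misrepresentation": "Fix Immediately",
    "outdated major feature": "Fix This Month",
    "incorrect positioning": "Fix This Month",
    "wrong integration": "Fix This Month",
    "minor inaccuracy": "Fix This Quarter",
    "incomplete information": "Fix This Quarter",
    "generic description": "Monitor",
    "minor phrasing issue": "Monitor",
}


def prioritize(issue_type):
    """Map an issue type to its bucket; unknown types need a human decision."""
    return ISSUE_BUCKETS.get(issue_type.lower(), "Unclassified")


def sort_findings(issue_types):
    """Order findings so the most urgent bucket comes first."""
    rank = {bucket: i for i, bucket in enumerate(BUCKET_ORDER)}
    return sorted(issue_types, key=lambda t: rank.get(prioritize(t), len(BUCKET_ORDER)))


findings = ["minor phrasing issue", "wrong integration", "wrong pricing"]
for issue in sort_findings(findings):
    print(f"{prioritize(issue)}: {issue}")
```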

Time Investment: 6-8 hours initially, 3-4 hours monthly for re-testing

The Automated LLMClicks.ai Method

The manual process works but doesn’t scale. LLMClicks.ai automates:

- Automated prompt generation based on your industry

- Multi-platform scanning (5 platforms simultaneously)

- 120-point accuracy algorithm detecting hallucinations

- Source identification showing what AI cites

- Impact scoring prioritizing by business impact

- Continuous daily monitoring with alerts

Time difference: 6-8 hours manual vs 2 minutes automated

How to Fix AI Brand Hallucinations (5-Step Strategy)

Step 1: Build Entity Disambiguation Infrastructure

Help AI distinguish your brand from similar entities.

Technical Fixes:

Complete Schema.org Markup: Add Organization schema with legal name, founding date, headquarters, website, social profiles, logo, description, industry.

Comprehensive “About” Page: Include founding story with date, founder names, company mission, what you do (and don’t do), location, team size, milestones, unique identifiers (CrunchBase, LinkedIn).

Use Unique Identifiers Consistently: CrunchBase profile, LinkedIn company page, G2/Capterra profiles, Twitter handle, official domain.
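To make the schema step concrete, here is a minimal Python sketch that builds Organization markup as JSON-LD and wraps it in a script tag for your page head. Every value is a placeholder (example.com, "Example Brand"), and `organization_jsonld` is a hypothetical helper. The property names (`legalName`, `foundingDate`, `sameAs`, and so on) are standard Schema.org Organization properties. The profile URLs in `sameAs` are where your unique identifiers go.

```python
import json


def organization_jsonld(name, legal_name, founding_date, url, logo,
                        description, locality, country, same_as):
    """Return a <script> tag containing Schema.org Organization JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "legalName": legal_name,
        "foundingDate": founding_date,
        "url": url,
        "logo": logo,
        "description": description,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": locality,
            "addressCountry": country,
        },
        # Profiles that identify the same entity elsewhere on the web.
        "sameAs": same_as,
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
snippet = organization_jsonld(
    name="Example Brand",
    legal_name="Example Brand, Inc.",
    founding_date="2021-03-01",
    url="https://example.com",
    logo="https://example.com/logo.png",
    description="Example Brand is a project management tool for remote teams.",
    locality="Austin",
    country="US",
    same_as=[
        "https://www.linkedin.com/company/example-brand",
        "https://www.crunchbase.com/organization/example-brand",
    ],
)
print(snippet)
```

Run the output through a structured data validator before you ship it.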

Content Fixes:

Explicit Disambiguation: “LLMClicks.ai is an AI visibility tracker, not to be confused with ClickLLC (PPC agency) or LLM Systems (data infrastructure).”

“What We Do / Don’t Do” Sections: “We detect AI hallucinations. We do NOT offer social media monitoring, review management, content removal services, or traditional SEO audits.”

Specific Terminology: Use unique, specific positioning language instead of generic phrases.

Step 2: Create Authoritative Answer Content

For every hallucination AI gets wrong, create definitive content with the correct answer.

If AI hallucinates pricing: Create detailed pricing page with current table, “Last Updated” date, exact plan details, currency, comparison, FAQ schema, “Not Included” section.

If AI fabricates features: Create comprehensive documentation listing what you have, what you DON’T have, roadmap items (labeled “coming soon”), integration list with explicit non-integrations.

If AI confuses history: Write authoritative timeline with founding date, major launches, funding rounds, key milestones, pivot points with dates.

Format Requirements:

- Clear H2/H3 headers (AI uses these for structure)

- Tables and lists (AI extracts these reliably)

- FAQ schema (gives AI Q&A pairs to cite)

- Date stamps (“Last Updated: January 2026”)
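For the FAQ schema item, a small generator keeps the markup in sync with your actual answers. The Python sketch below builds FAQPage JSON-LD from question and answer pairs. The brand, price, and date are placeholders, and `faq_jsonld` is a hypothetical helper. Using `dateModified` to carry the "Last Updated" date is one reasonable choice, not the only one.

```python
import json


def faq_jsonld(qa_pairs, last_updated):
    """Build Schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "dateModified": last_updated,  # ISO date matching your visible date stamp
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder content for illustration only.
faq_markup = faq_jsonld(
    [
        ("How much does Example Brand cost?",
         "The Pro plan costs $99 per month, billed in USD."),
        ("Does Example Brand integrate with Salesforce?",
         "No. Example Brand does not currently offer a Salesforce integration."),
    ],
    last_updated="2026-01-15",
)
print(faq_markup)
```

Note the second answer: stating plainly what you do not offer gives AI a clear negative to cite.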

Step 3: Target High-Citation Sources

Get accurate information onto sites AI actually trusts and cites.

Tier 1 Sources (Highest AI Citation):

- Reddit (cited more than official websites in 73% of ChatGPT brand queries)

- Quora

- Industry forums

- Wikipedia (if notable)

Tier 2 Sources:

- Review sites (G2, Capterra, Trustpilot)

- Tech news (TechCrunch, VentureBeat)

- LinkedIn articles

- YouTube (transcripts get indexed)

Tier 3 Sources:

- Product Hunt

- Industry directories

- Partner marketplaces

Reddit Strategy:

Find relevant conversations, provide value first, mention your product as ONE option among several, be transparent about your connection.

Example: “I’ve used a few AI monitoring tools. Otterly is good for basic tracking ($29/mo), Peec works for agencies, LLMClicks.ai (disclosure: I work on this) focuses on accuracy detection. For your use case, I’d start with Otterly to see if you’re mentioned at all.”

Quora Strategy:

Write 500-800 word comprehensive answers addressing the question, mention your tool in context alongside alternatives, provide specific examples.

Review Site Strategy:

Update G2/Capterra profiles quarterly with accurate pricing, complete feature lists, correct integration lists, current screenshots. Encourage detailed customer reviews that mention specific features, use cases, actual pricing, integrations.

Step 4: Flag and Correct Inaccuracies Directly

Report hallucinations to AI platforms (limited effectiveness but worth doing for critical errors).

How to Report:

ChatGPT: Thumbs down → “This is harmful/false” → Provide specific correction with source

Perplexity: Flag icon → “Incorrect information” → Provide correction

Google: Feedback button → “Information is inaccurate” → Describe error

Claude: Feedback button → Describe inaccuracy → Provide source

Reality Check: These reports help long-term but don’t expect immediate fixes. Timeline: Your report → Review → Training data for next model → Next release (3-6 months) → Correction appears.

Use this as a supplementary, not primary, correction strategy.

Step 5: Implement Continuous Monitoring

AI models update unpredictably. Corrections that work today might be overwritten in the next training cycle.

Manual Monitoring: Monthly prompt re-testing (3-4 hours), quarterly deep audits (30-40 prompts).

Automated Monitoring (LLMClicks.ai): Daily accuracy checks, immediate alerts for new hallucinations, trend analysis, competitive benchmarking, source tracking.

Example alerts: “⚠️ New pricing hallucination: ChatGPT showing Starter at $39/mo (actual: $49)” or “✅ Claude accuracy improved from 67% to 89% over 30 days.”
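A pricing alert like the first example can come from a very simple check. The Python sketch below is a naive illustration, not how LLMClicks.ai or any other tool works internally. It assumes you already have the AI response as text, and it only looks at the first dollar amount after the plan name.

```python
import re

PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{1,2})?)")


def quoted_price(response_text, plan):
    """Return the first dollar amount that appears after the plan name, if any."""
    start = response_text.lower().find(plan.lower())
    if start == -1:
        return None
    match = PRICE_PATTERN.search(response_text, start)
    return float(match.group(1)) if match else None


def pricing_alert(platform, response_text, plan, actual_price):
    """Return an alert string when the quoted price differs from the real one."""
    found = quoted_price(response_text, plan)
    if found is None or found == actual_price:
        return None
    return (f"New pricing hallucination: {platform} showing {plan} "
            f"at ${found:g}/mo (actual: ${actual_price:g})")


# Illustrative response text, not real AI output.
alert = pricing_alert(
    "ChatGPT",
    "The Starter plan is $39/mo and the Pro plan is $99/mo.",
    plan="Starter",
    actual_price=49,
)
print(alert)
```

A real check needs to handle currencies, annual vs monthly pricing, and responses that never name the plan, so treat this as a starting point.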

Without monitoring, you discover problems months later when deals fall through. With monitoring, you catch problems early.

Brand Reputation Management Tools: Traditional vs AI-Ready

| Tool Category | What It Tracks | AI Hallucination Detection | Best For | Pricing |
| --- | --- | --- | --- | --- |
| Traditional (Mention, Brand24) | Social media, news, blogs, forums | ❌ No | Public mentions | $29-$199/mo |
| SEO (SEMrush, Ahrefs) | Rankings, backlinks, keywords | ❌ No | Search visibility | $99-$499/mo |
| Reviews (G2, Trustpilot) | Customer reviews, ratings | ❌ No | Review management | Free-$299/mo |
| AI (LLMClicks.ai, Waikay, Peec) | ChatGPT, Perplexity, Claude, Gemini | ✅ Yes | AI accuracy | $49-$399/mo |

Traditional Tools (Still Useful But Incomplete)

Mention, Brand24, Sprout Social excel at real-time social monitoring, news tracking, sentiment analysis, competitive social monitoring, team workflows.

What they miss: AI-generated responses, hallucinations, zero-click AI interactions, training data issues, entity confusion, private AI conversations.

When to use: You still need these. PR crises start on Twitter. Bad-mouthing happens publicly. Influencer mentions are tracked here. But don’t assume they’re protecting you from AI hallucinations.

AI-Ready Tools

LLMClicks.ai ($49-$399/mo): Best for SaaS worried about accuracy. 120-point accuracy audit validates pricing, features, and positioning. Hallucination detection is a unique strength. Coverage: ChatGPT, Perplexity, Claude, Gemini, Copilot.

Waikay (custom pricing): Best for prompt-level tracking. Shows where AI has no information about you. Good for brands building AI visibility from scratch.

Peec AI (€89-€199/mo): Best for agencies. Competitive benchmarking, beautiful client-facing reports. Good for billing AI visibility work.

Profound ($2,000+/mo): Best for Fortune 500. 8+ platform coverage, SOC 2 compliance, automated prompt discovery. Enterprise scale.

For a detailed breakdown of each tool, see our comprehensive guide on best AI visibility tracker tools.

The Hybrid Approach (Recommended)

Layer 1: AI Accuracy (LLMClicks.ai $49-$149/mo) – Catch hallucinations, validate accuracy

Layer 2: Social Monitoring (Brand24 or Mention $29-$79/mo) – Track public mentions, sentiment

Layer 3: Reviews (G2 + Trustpilot free-$99/mo) – Aggregate reviews, respond to feedback

Total: $78-$327/month for comprehensive reputation coverage

Future-Proofing Your Brand Reputation Strategy

Emerging Trends to Watch

1. LLMs.txt Files (The New Robots.txt): Structured file at yourwebsite.com/llms.txt providing official brand info, preferred sources, corrections to misconceptions. Not yet universal but coming by late 2026.

Action: Start drafting your llms.txt now.
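Here is a rough Python sketch for drafting the file. It assumes the markdown layout from the public llms.txt proposal as I understand it: a title, a short blockquote summary, then sections of annotated links. All names and URLs are placeholders, and `draft_llms_txt` is a hypothetical helper. Check the current proposal before publishing, since the format is still evolving.

```python
def draft_llms_txt(name, summary, sections):
    """Assemble a markdown-style llms.txt draft.

    `sections` maps a section heading to a list of (title, url, note) tuples.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content for illustration only.
llms_txt = draft_llms_txt(
    name="Example Brand",
    summary="Example Brand is a project management tool for remote teams.",
    sections={
        "Official sources": [
            ("Pricing", "https://example.com/pricing", "Current plans and prices"),
            ("Integrations", "https://example.com/integrations",
             "Supported and unsupported integrations"),
        ],
        "Common misconceptions": [
            ("What we do and don't do", "https://example.com/about",
             "Clarifies features we do not offer"),
        ],
    },
)
print(llms_txt)
```

Save the output at the root of your domain as llms.txt and regenerate it whenever pricing or features change.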

2. Real-Time AI Training: Perplexity already uses real-time search. Training lag shrinking from months to days. Fresh content becomes even more critical.

Action: Shift to monthly or weekly content updates. Add timestamps everywhere.

3. AI-Generated Synthetic Comparisons: AI creating original comparisons not citing any single source. Makes source targeting less effective.

Action: Focus on entity disambiguation and unique positioning AI can synthesize accurately.

4. Multimodal Hallucinations: AI processing images, video, audio creates new problems: logo confusion, product photos attributed wrong, video misattribution.

Action: Implement image schema, use consistent branding, watermark demo videos, ensure accurate transcripts.

The 2026-2027 Roadmap

Q1-Q2 2026: Complete AI audit, fix critical hallucinations, implement schema markup, create AI-friendly content, begin Reddit/Quora engagement, set up monitoring.

Q3 2026: Publish on high-citation sources, build journalist relationships, analyze what works, report critical hallucinations to platforms.

Q4 2026: Monthly tracking, quarterly content refreshes, expand to new AI platforms, test new positioning, document ROI.

2027: Implement llms.txt, multimodal protection, AI-first content strategy, predictive hallucination prevention, competitive AI positioning, AI shopping integration.

The Bottom Line: Take Back Control

In 2026, brand reputation has split into two realities:

Visible: Social mentions, reviews, news (traditional tools cover this)

Invisible: AI-generated answers in ChatGPT/Perplexity where buying decisions happen privately (traditional tools miss this entirely)

The invisible reality is bigger and growing. Over 40% of searches now happen in AI platforms. By 2027, AI might represent the majority of brand discovery.

The crisis: Unless you’re specifically monitoring AI, you have no idea what’s being said about you there.

The data:

- 67% error rate in AI-generated news citations

- 6-18 month training lag means AI cites old information

- Reddit influences AI more than your website

- Traditional tools catch 0% of hallucinations

But you can take back control:

Brands winning in 2026 are monitoring what AI says, auditing for hallucinations, fixing critical errors through authoritative content, engaging on high-citation sources, and tracking improvements over time.

Traditional reputation management isn’t dead. But it’s no longer enough. You need both visible and invisible monitoring.

Your Next Step

Find out what ChatGPT is actually saying about your brand right now.

Test these five prompts manually:

- “What is [Your Brand Name]?”

- “How much does [Your Brand] cost?”

- “Compare [Your Brand] vs [Competitor]”

- “Does [Your Brand] have [key feature]?”

- “Best [your category] tool for [use case]”

Frequently Asked Questions:

Q1. What are AI hallucinations in brand reputation management?

Ans: AI hallucinations occur when Large Language Models (LLMs) like ChatGPT or Claude confidently generate false information about your brand, stating it as fact. Unlike negative reviews, these are invisible to traditional monitoring tools. Common examples include incorrect pricing, fabricated features, or confusing your brand with a competitor.

Q2. Why do traditional reputation tools fail to detect AI hallucinations?

Ans: Traditional tools like Brand24 or Mention track observable links and mentions on social media or the web. AI platforms operate in a closed loop, generating answers without public links or trackable mentions. Furthermore, AI synthesizes new narratives rather than just citing existing pages, making these errors invisible to standard “social listening” software.

Q3. How can I fix AI hallucinations about my brand?

Ans: Fixing AI hallucinations requires a 5-step “Entity Resolution” strategy: 1) Implement comprehensive Schema markup to disambiguate your brand. 2) Publish authoritative “Answer Content” (like detailed pricing pages) to correct specific errors. 3) Engage on high-citation sources like Reddit and Quora. 4) Flag inaccuracies directly to the AI platforms. 5) Implement continuous monitoring to catch regression.

Q4. What is the difference between traditional SEO and AI reputation management?

Ans: Traditional SEO focuses on ranking links on Google search results. AI Reputation Management (or GEO) focuses on influencing the answers generated by AI models in zero-click searches. While SEO relies on keywords and backlinks, AI reputation relies on Entity Clarity, structured data, and presence in high-trust training sources like Reddit and Wikipedia.

Q5. How do I audit my brand for AI misinformation?

Ans: To audit your brand, you can manually test 20-30 prompts (covering pricing, features, and comparisons) across major platforms like ChatGPT, Perplexity, and Claude. Alternatively, use automated tools like LLMClicks.ai to scan for hallucinations, identifying where AI models are citing outdated data, confusing your entity, or fabricating features.