By Shripad Deskhmukh, Founder at LLMClicks.ai

Published on: 10-Feb-2026 | 3300 words | 15-minute read

Ranking on Google used to be the finish line. Today, it’s often just the starting point.

As users turn to ChatGPT, Gemini, Claude, and Perplexity for direct answers, brand discovery is increasingly happening inside AI-generated responses, not on traditional search result pages (SERPs).

This shift changes what “visibility” really means. A brand can rank #1 in Google and still be invisible in an AI answer. Conversely, a brand with lower traditional rankings might be the primary recommendation in ChatGPT because the model has learned to treat it as “trusted” from its training data.

In many cases, users never click a link at all. They accept the answer they’re given and move on.

In this article, we break down the critical difference between Traditional SEO (optimizing for retrieval) and LLM SEO (optimizing for synthesis). We explain why AI mentions are becoming more influential than rankings, and show how brands can adapt to a world where being referenced by AI matters as much as being ranked by Google.

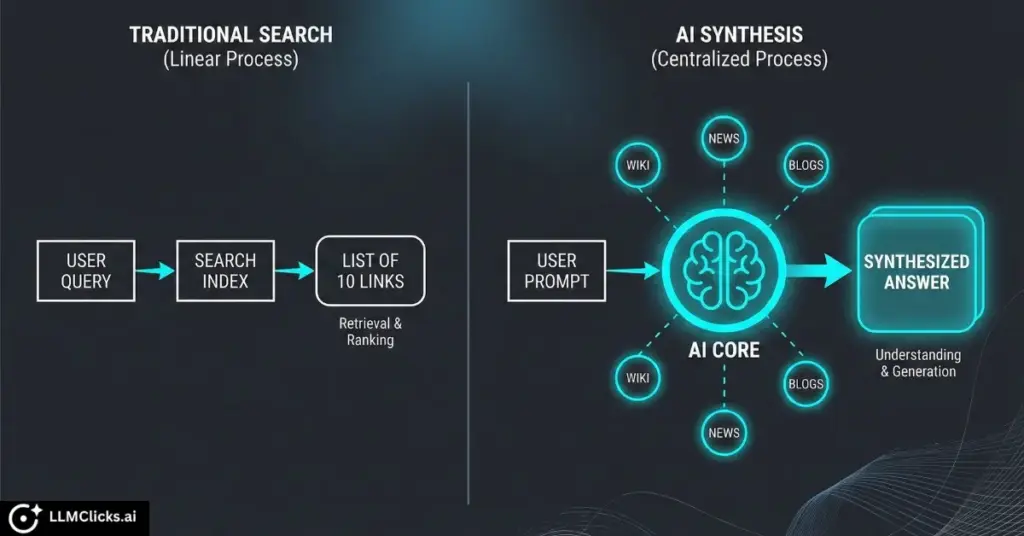

Traditional SEO is the long-established practice of optimizing websites to rank higher in search engine results pages (SERPs) and earn organic traffic. It was designed for a Retrieval Model: users typed queries into Google, scanned a list of blue links, and clicked through to websites to find answers.

At its core, traditional SEO focuses on three primary outcomes:

Traditional SEO relies on a set of well-defined optimization pillars:

Together, these levers help search engines discover, evaluate, and rank pages based on relevance and quality.

LLM SEO (often referred to as LLM Visibility or Generative Engine Optimization) focuses on how often, where, and in what context your brand, products, or content appear in answers generated by large language models (LLMs) such as ChatGPT, Gemini, Claude, and Perplexity.

Unlike traditional SEO, which operates on a retrieval model (finding the best link), LLM SEO operates on a Synthesis Model. The AI draws on its training data and, for many assistants, live search results, then synthesizes a single, direct answer.

In this model, there are no “rankings” in the traditional sense. There is only inclusion or exclusion. If your brand isn’t mentioned in the answer, you are effectively invisible to the user.

LLM visibility is typically evaluated across four key dimensions:

These signals together show your Share of Voice inside AI answers, not just on search result pages.
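The article does not give a formula for Share of Voice. One common way to approximate it, assumed here, is to collect AI answers for a set of prompts and divide each brand's mention count by the total mentions of all tracked brands. The brand names (`Acme`, `Globex`, `Initech`) and sample answers below are hypothetical, and matching on whole-word brand names is a simplifying assumption.

```python
import re
from collections import Counter


def count_mentions(answers: list[str], brands: list[str]) -> Counter:
    """Count how many answers mention each brand at least once (whole word, case-insensitive)."""
    counts: Counter = Counter()
    for answer in answers:
        for brand in brands:
            # \b word boundaries keep a short brand name from matching inside another word
            if re.search(rf"\b{re.escape(brand)}\b", answer, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's mentions divided by the total mentions of all tracked brands."""
    counts = count_mentions(answers, brands)
    total = sum(counts.values())
    if total == 0:
        return {brand: 0.0 for brand in brands}
    return {brand: counts[brand] / total for brand in brands}


# Hypothetical answers collected for a prompt like "best project management software"
answers = [
    "For small teams, Acme and Globex are popular choices.",
    "Globex is often recommended for agencies.",
    "Initech suits enterprises, though Globex is cheaper.",
]
print(share_of_voice(answers, ["Acme", "Globex", "Initech"]))
# {'Acme': 0.2, 'Globex': 0.6, 'Initech': 0.2}
```

In this toy sample Globex appears in all three answers, so it holds 60% of the voice even though no single answer names it as the only option.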

The shift from “Search” to “Answer” requires a completely different mindset. Traditional SEO and LLM SEO solve different problems. One is designed to win rankings and clicks. The other is designed to win mentions and trust inside AI-generated answers.

Understanding the distinction is critical because optimizing for one does not automatically guarantee success in the other.

Here is how the two strategies compare side-by-side:

| Feature | Traditional SEO | LLM SEO (AI Optimization) |
|---|---|---|
| Primary Goal | Rank #1 on a list of links | Be cited/mentioned in the answer |
| User Interaction | User clicks a link to read | User reads the answer directly (Zero-Click) |
| Core Metric | Organic Traffic & CTR | LLM Visibility & Share of Voice |
| Content Focus | Keywords & Word Count | Entities, Context & Facts |
| Authority Signal | Backlinks (Quantity/Quality) | Citations & Semantic Proximity |
| Competition | Fighting for pixels on Page 1 | Fighting for a sentence in the answer |

Traditional SEO focuses on where your page appears. LLM SEO focuses on whether your brand is included in the answer at all.

User behavior has shifted from browsing links to consuming answers. Instead of comparing multiple websites, people increasingly rely on AI systems to summarize information and guide decisions in a single response.

Traditional SEO relies heavily on backlinks as a proxy for trust. LLM SEO relies on Entity Confidence: does the AI know who you are?

LLMs favor content that demonstrates topical depth, not shallow keyword alignment.

To understand why LLM SEO is urgent, we must understand the fundamental shift in user psychology. We are moving from an era of “Search & Sort” to an era of “Ask & Trust.”

In the traditional search model, the user burden was high. A user searching for “best project management software” had to:

This “comparison fatigue” is exactly what AI solves. When a user asks ChatGPT the same question, the AI does the heavy lifting. It aggregates the data, filters out the noise, and presents a synthesized recommendation.

The decline in click-through rates (CTR) isn’t just about Google hiding links; it’s about efficiency.

For brands, this shift is alarming, but it is critical to understand. If your marketing strategy relies on users visiting your site to read your “About Us” page, you are optimizing for a behavior that is disappearing. You need to ensure your “About Us” value proposition is delivered inside the AI answer, because that might be the only interaction the user has with your brand.

In this new environment, discovery is no longer driven by ranking alone. It’s driven by whether AI systems choose to include and explain your brand.

AI mentions often influence users before any traffic is generated. When someone asks ChatGPT, Gemini, or Perplexity a question, the first thing they see is not a list of websites but a narrative answer. The brands included in that answer gain immediate exposure and credibility, even if the user never clicks through to a page.

AI summaries don’t just reference brands; they position them. A product can be framed as “best for beginners,” “enterprise-ready,” or “commonly used by agencies” in a single paragraph. That positioning can shape buying decisions long before a user compares pricing pages or feature lists.

The real risk is invisibility. A brand can perform well in traditional SEO and still be absent from AI answers. If competitors are consistently mentioned and your brand is not, users may never reach a point where rankings matter. In many AI-driven journeys, there is no second step. The answer is the endpoint.

Is LLM SEO replacing Traditional SEO? No.

They are two sides of the same coin. In fact, many AI assistants today, particularly those that search the web, use a process called Retrieval-Augmented Generation (RAG): they first retrieve information from a search index (such as Bing’s or Google’s) and then generate an answer from it.

This means Traditional SEO is still the foundation. You cannot be synthesized if you are not indexed.
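To make the retrieve-then-generate order concrete, here is a toy sketch of a RAG pipeline. It is not how ChatGPT, Perplexity, or Gemini actually rank content: real systems use search engines, embeddings, and an LLM for generation. Here, simple term overlap stands in for retrieval, blank-line-separated blocks stand in for passages, and a string join stands in for the model. The URLs and page text are hypothetical. What it illustrates is the point above: a page that never made it into the index cannot appear in the answer.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; no stemming, so 'cost' and 'costs' differ."""
    return re.findall(r"[a-z0-9]+", text.lower())


def split_into_passages(page_text: str) -> list[str]:
    """Treat blank-line-separated blocks as passages (a simplifying assumption)."""
    return [p.strip() for p in page_text.split("\n\n") if p.strip()]


def build_index(pages: dict[str, str]) -> list[tuple[str, str]]:
    """Return (url, passage) pairs for every page that was crawled and indexed."""
    return [(url, passage) for url, text in pages.items() for passage in split_into_passages(text)]


def retrieve(index: list[tuple[str, str]], query: str, k: int = 2) -> list[tuple[int, str, str]]:
    """Score passages by query-term overlap (a stand-in for real ranking) and keep the top k."""
    query_terms = Counter(tokenize(query))
    scored = []
    for url, passage in index:
        passage_terms = Counter(tokenize(passage))
        score = sum(min(n, passage_terms[t]) for t, n in query_terms.items())
        if score > 0:
            scored.append((score, url, passage))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


def generate_answer(retrieved: list[tuple[int, str, str]]) -> str:
    """Stand-in for the LLM: stitch retrieved passages together with their source URLs."""
    if not retrieved:
        return "No sources found."
    return " ".join(f"{passage} [{url}]" for _, url, passage in retrieved)


# Hypothetical crawled pages; a page missing from this dict was never indexed
pages = {
    "https://example.com/pricing": "Acme costs $10 per user per month.\n\nAcme offers a free plan for up to 3 users.",
    "https://example.org/blog": "Our team loves hiking.",
}
index = build_index(pages)
query = "How much does Acme cost per user?"
print(generate_answer(retrieve(index, query)))
```

The pricing page is split into two passages, and each is scored on its own, which mirrors why a single clear, self-contained passage can be picked up even when the rest of the page is unrelated.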

AI systems tend to prioritize “Tier 1” sources: sites that rank consistently, earn authoritative backlinks, and demonstrate expertise. Strong domain authority (DA) likely remains one of the main signals telling the AI, “This source is safe to cite.”

If your content isn’t technically accessible, it won’t be retrieved by the RAG process. Core elements like clean site architecture, fast load times, and proper indexing are just as critical for AI bots as they are for Google crawlers.
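One quick accessibility check is whether your robots.txt blocks the agents AI vendors use. The sketch below uses Python’s standard-library `urllib.robotparser` against an example robots.txt string (hypothetical, so it runs offline). The names `GPTBot`, `ClaudeBot`, `PerplexityBot`, and `Google-Extended` are tokens these vendors have published for robots.txt, but names change, so confirm them in each vendor’s current documentation. Keep in mind that robots.txt is advisory and only governs crawling; it does not remove what a model may have learned earlier.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; in practice, read the file served at your domain's /robots.txt
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

# Tokens published by AI vendors for robots.txt; confirm current names in each vendor's docs
AI_AGENTS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended"]


def crawler_access(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return whether each agent may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


report = crawler_access(ROBOTS_TXT, "https://example.com/pricing", AI_AGENTS)
for agent, allowed in report.items():
    print(f"{agent:16} {'allowed' if allowed else 'BLOCKED'}")
```

With this example file, `GPTBot` is blocked site-wide while the other agents fall through to the `*` group and can reach `/pricing`. A rule written for one purpose (for example, opting out of training) can quietly shut a brand out of retrieval as well.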

Evergreen content that performs well in search (guides, FAQs, and documentation) often becomes the “Ground Truth” for AI models. SEO doesn’t just drive traffic; it supplies the raw material that AI systems summarize.

Winning in an AI-driven environment requires a shift in strategy. You aren’t just writing for humans anymore; you are formatting for machine inference.

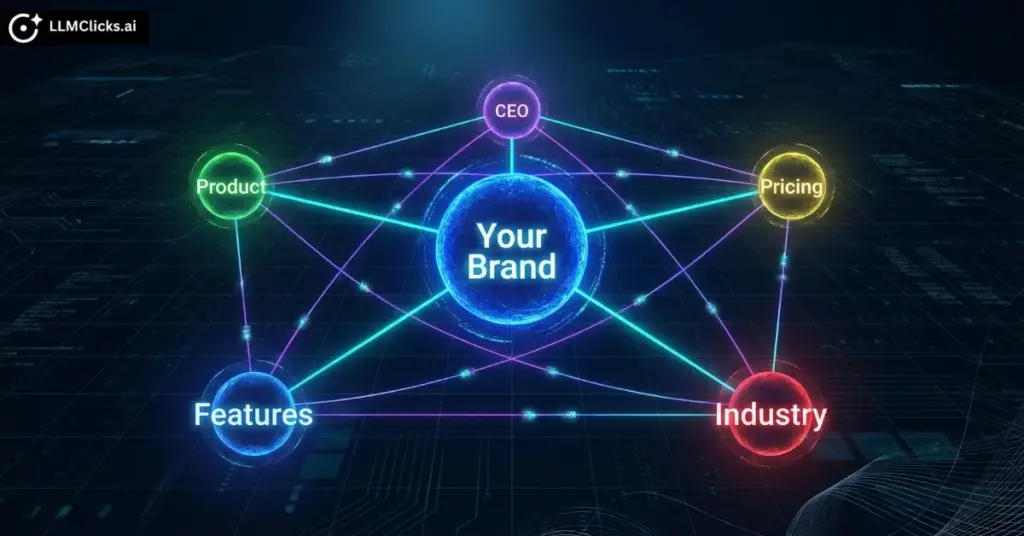

LLMs understand the world through “Entities” (people, places, concepts) and the relationships between them.

AI models love structure. They often extract small sections of content called “passages” rather than full pages. To increase your chances of being cited:

LLMs rely on “Co-Citation.” If your brand is frequently mentioned alongside industry leaders in trusted publications, the AI infers that you belong in that peer group.

Strategy: Get mentioned in “Tier 1” data sources like Reddit, Wikipedia, G2, and authoritative industry news. A mention in a highly cited Reddit thread can be worth more for Perplexity SEO than a standard backlink.
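Nobody outside the AI labs knows exactly how a model weighs co-citation, but you can at least measure your own co-citation footprint in the sources you care about. This sketch assumes a brand counts as “mentioned” when its name appears as a whole word, and counts how many documents (for example, articles or threads you have collected) mention each pair of brands together. The brand names and documents are hypothetical.

```python
import re
from collections import Counter
from itertools import combinations


def brands_in(text: str, brands: list[str]) -> set[str]:
    """Brands whose name appears in the text as a whole word (case-insensitive)."""
    return {b for b in brands if re.search(rf"\b{re.escape(b)}\b", text, flags=re.IGNORECASE)}


def co_citation_counts(documents: list[str], brands: list[str]) -> Counter:
    """Count how many documents mention each pair of brands together."""
    pairs: Counter = Counter()
    for doc in documents:
        # Sorting gives each pair one canonical key, e.g. ("Acme", "Globex")
        present = sorted(brands_in(doc, brands))
        for a, b in combinations(present, 2):
            pairs[(a, b)] += 1
    return pairs


# Hypothetical documents: "Globex" and "Initech" play the industry leaders, "Acme" is your brand
documents = [
    "Top project tools this year: Globex, Initech and Acme.",
    "Acme vs Globex: which is better for agencies?",
    "An in-depth Initech review.",
]
for pair, n in co_citation_counts(documents, ["Acme", "Globex", "Initech"]).most_common():
    print(pair, n)
```

A rising count for pairs like `("Acme", "Globex")` is a rough signal that your brand is being discussed in the same breath as the leaders; it is a proxy you can track, not a view into the model itself.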

Most marketers think LLMs just “know” things. In reality, LLM SEO requires optimizing for two very different retrieval methods: Training Data and RAG (Retrieval-Augmented Generation).

This is the information the AI learned during its initial training (e.g., GPT-4’s knowledge cutoff).

This is how Perplexity, Bing Chat (now Microsoft Copilot), and Gemini work: they search the live web at query time to answer a question.

Users now upload PDFs and URLs directly to ChatGPT to “summarize this.”

Not all Answer Engines are the same. A strategy that works for ChatGPT might fail for Perplexity. Here is the nuance:

Format: Video Chapters. Distinct timestamps in your YouTube videos can help Gemini point to the exact moment that answers the query.

You can’t manage what you don’t measure. As you pivot to LLM SEO, your KPI dashboard needs to evolve beyond Google Analytics.

Traffic-only metrics are insufficient because they don’t capture the “Zero-Click” influence of an AI answer.

To understand your true visibility, you need to track:

You can manually test prompts in ChatGPT, but it doesn’t scale. Results change based on user history and model updates. For reliable data, brands use AI visibility tools (like LLMClicks.ai) to automate the testing of hundreds of prompts daily, providing a consistent benchmark of performance.
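Because answers vary between runs and model versions, a tracking harness typically asks each prompt several times and reports a rate rather than a single yes/no. The sketch below assumes a hypothetical `ask(prompt) -> str` function per model; wiring it to a real provider’s API is left out, and this is not how LLMClicks.ai or any other tool is implemented. The stub models exist only so the example runs offline.

```python
import re
from itertools import cycle
from typing import Callable

# Hypothetical interface: a function that sends a prompt to one model and returns its answer text
AskFn = Callable[[str], str]


def mention_rate(ask: AskFn, prompts: list[str], brand: str, runs_per_prompt: int = 3) -> float:
    """Fraction of all answers (prompts x runs) that mention the brand as a whole word."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = total = 0
    for prompt in prompts:
        for _ in range(runs_per_prompt):  # repeat: the same prompt can get different answers
            total += 1
            if pattern.search(ask(prompt)):
                hits += 1
    return hits / total if total else 0.0


def benchmark(models: dict[str, AskFn], prompts: list[str], brand: str,
              runs_per_prompt: int = 3) -> dict[str, float]:
    """Mention rate per model, so the same prompt set can be compared across assistants."""
    return {name: mention_rate(ask, prompts, brand, runs_per_prompt) for name, ask in models.items()}


def make_stub(answers: list[str]) -> AskFn:
    """Offline stand-in for a real model: cycles through canned answers, ignoring the prompt."""
    replies = cycle(answers)

    def ask(prompt: str) -> str:
        return next(replies)

    return ask


prompts = ["best project management software", "project management tool for agencies"]
models = {
    "model_a": make_stub(["Acme and Globex are both solid."]),
    "model_b": make_stub(["Try Acme.", "Try Globex.", "Globex or Initech."]),
}
print(benchmark(models, prompts, "Acme"))
```

Storing the full answer text alongside each rate would also let you review sentiment and accuracy, not just frequency.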

In Traditional SEO, a high ranking was enough. In LLM SEO, how you are described matters more.

Imagine a user asks, “Is [Your Brand] worth the price?”

Both answers might reference your pricing page (same visibility), but Scenario B kills the sale immediately.

This shift from “ranking” to “reputation management” is why we call it LLM Visibility: it’s about being seen correctly, not just being seen.

Is LLM SEO replacing Traditional SEO? No. It’s an expansion.

Traditional SEO builds the infrastructure: the authority, the technical health, and the reach. LLM SEO ensures that authority is translated into the new language of AI mentions, citations, and trust.

The brands that win in 2026 won’t choose one over the other. They will combine both to stay visible across SERPs and AI-driven answers.

The question isn’t whether AI will mediate discovery; it already does. The real question is whether your brand is being understood accurately where those answers are formed.

Ans: Traditional SEO focuses on ranking web pages in search engines to drive clicks (Retrieval). LLM SEO (or LLM Visibility) focuses on optimizing content so it is cited, summarized, and trusted in direct answers by AI models like ChatGPT (Synthesis).

Ans: Yes. Many AI assistants, especially those that search the web, use “Retrieval-Augmented Generation” (RAG), meaning they rely on search indexes to find information. Strong Traditional SEO ensures your content is indexed and “retrievable” before the AI can synthesize it.

Ans: AI tools prioritize “Information Gain,” clarity, and semantic relevance. A page ranking #15 on Google might be cited by ChatGPT because it answers the specific question more directly and concisely than the #1 ranking page.

Ans: To audit visibility, run a series of branded and non-branded prompts across major AI models (ChatGPT, Gemini, Perplexity). Analyze the responses for Frequency (are you there?), Sentiment (is it positive?), and Accuracy (is it true?). Tools like LLMClicks.ai can automate this process.

Ans: Focus on Entity Density (clear definitions of who you are), Formatting (using tables and lists for easy extraction), and Contextual Authority (getting cited in trusted sources like Reddit and G2).

© 2026 LLMClicks.ai. All Rights Reserved.